Revolutionizing Biologics: How AI-Driven Excipient Selection is Transforming Enzyme Formulation Stability


Abstract

This article provides a comprehensive overview of the paradigm shift towards artificial intelligence (AI) in the selection of excipients for enzyme-based drug formulations. It explores the foundational principles of enzyme-excipient interactions and the limitations of traditional trial-and-error approaches. We detail the methodological frameworks of machine learning (ML) and deep learning models that predict excipient efficacy for stabilizing enzymes against aggregation, denaturation, and loss of activity. The discussion extends to troubleshooting common formulation challenges and optimizing protocols using AI-guided Design of Experiments (DoE). Finally, we present validation strategies and comparative analyses demonstrating the superior performance, speed, and cost-effectiveness of AI-driven methods versus conventional techniques, highlighting their transformative potential for accelerating the development of robust and stable biotherapeutics.

The Enzyme Stability Challenge: Why Traditional Excipient Selection Falls Short

The inherent instability of enzyme therapeutics is a major development challenge, and it is the problem that AI-driven excipient selection sets out to address. Excipients are not inert fillers but critical stabilizers that protect against denaturation, aggregation, and deactivation. This document details the quantitative impact of excipients and provides standardized protocols for empirical validation of AI-generated excipient hypotheses.

Quantitative Impact of Common Excipient Classes on Enzyme Stability

The following table synthesizes recent data (2023-2024) on the stabilizing efficacy of various excipient classes for model enzymes (e.g., Lysozyme, Lactate Dehydrogenase, β-Galactosidase) under accelerated stability conditions (40°C/75% RH for 4 weeks).

Table 1: Stabilizing Efficacy of Excipient Classes on Enzyme Activity Retention

| Excipient Class | Example Compounds | Typical Conc. Range | Mean Activity Retention (%) | Primary Stabilization Mechanism |
|---|---|---|---|---|
| Sugars | Trehalose, Sucrose | 5-10% (w/v) | 85 ± 6 | Water replacement, Vitrification |
| Polyols | Sorbitol, Glycerol | 5-15% (w/v) | 72 ± 9 | Preferential exclusion, Kosmotrope |
| Amino Acids | Glycine, Arginine | 50-200 mM | 78 ± 7 (Arg: 88 ± 4) | Specific ionic interactions, Suppress aggregation |
| Polymers | PEG-4000, HPMC | 0.1-1% (w/v) | 65 ± 10 (PEG: 80 ± 5) | Steric hindrance, Surface adsorption |
| Surfactants | Polysorbate 80 | 0.01-0.1% (w/v) | 90 ± 3 | Interface protection, Prevent surface adsorption |
| Buffers | Histidine, Citrate | 20-50 mM | Varies by pH optimum | pH control, Ionic strength modulation |

Data compiled from recent publications in Int. J. Pharm., J. Pharm. Sci., and Mol. Pharmaceutics.

Table 2: AI-Predicted vs. Empirical Stability for Novel Excipient Combinations

| Enzyme Target | AI-Proposed Excipient Cocktail (via QSAR Model) | Predicted Activity Retention at 4 Weeks | Empirically Measured Retention | Key Stability Indicator Measured |
|---|---|---|---|---|
| Protease A | 100 mM Arginine, 5% Trehalose, 0.03% PS80 | 94% | 91 ± 2% | Aggregation (SEC-HPLC), Residual Activity |
| Oxidase B | 200 mM Glycine, 2% Sorbitol, 50 mM Histidine Buffer | 87% | 82 ± 4% | Subvisible Particles (Microflow Imaging), Kinetic Assay |
| Kinase C | 10% Sucrose, 0.1% HPMC, 1 mM EDTA | 89% | 85 ± 3% | Secondary Structure (CD Spectroscopy), Thermal Shift (Tm) |

Experimental Protocols for Excipient Validation

Protocol 1: High-Throughput Screening of Excipient Libraries on Enzyme Stability

Objective: To empirically test the stabilizing effect of AI-proposed excipient candidates in a microplate format.

Materials: See "The Scientist's Toolkit" below.

Workflow:

- Formulation Preparation:

- Prepare 96-well master plates with varying excipient solutions in your desired buffer (e.g., 20 mM Histidine, pH 6.0). Use a liquid handler for reproducibility.

- Add a fixed volume of concentrated enzyme stock to each well to achieve target concentration (e.g., 1 mg/mL). Mix gently via plate shaking.

- Stress Induction:

- Seal plates and subject to accelerated thermal stress (e.g., 40°C) in a thermally controlled incubator for a defined period (e.g., 7 days).

- Include control plates stored at recommended long-term conditions (e.g., 4°C or -80°C).

- Stability Assessment:

- Activity Assay: At designated time points, transfer aliquots to an assay plate containing specific substrate. Monitor product formation kinetically using a plate reader.

- Aggregation Detection: Use a plate-based static light scattering (SLS) measurement at 350 nm to quantify high molecular weight species.

- Data Analysis:

- Normalize all activity/aggregation data to the unstressed (4°C) control.

- Calculate percentage activity retention and aggregation index. Use Z' factor to validate assay robustness for HTS.
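The data-analysis step above can be sketched in a few lines. This is a minimal illustration, not a validated analysis script: the well readings are hypothetical, the choice of fully inactivated enzyme as the low-signal control is an assumption, and the Z' expression is the standard plate-assay form, Z' = 1 - 3(σ_high + σ_low) / |μ_high - μ_low|.

```python
from statistics import mean, stdev


def percent_retention(stressed_rate, unstressed_control_mean):
    """Activity of a stressed well as a percentage of the unstressed (4 °C) control."""
    return 100.0 * stressed_rate / unstressed_control_mean


def z_prime(high_controls, low_controls):
    """Z' = 1 - 3 * (sd_high + sd_low) / |mean_high - mean_low|."""
    separation = abs(mean(high_controls) - mean(low_controls))
    return 1.0 - 3.0 * (stdev(high_controls) + stdev(low_controls)) / separation


# Hypothetical plate-reader rates (arbitrary units), for illustration only.
unstressed = [100.0, 102.0, 98.0, 101.0, 99.0]  # 4 °C control wells (high signal)
inactivated = [5.0, 6.0, 4.0, 5.5, 4.5]         # assumed low-signal control wells
stressed_wells = {"trehalose": 88.0, "buffer_only": 41.0}

control_mean = mean(unstressed)
retention = {
    name: percent_retention(rate, control_mean)
    for name, rate in stressed_wells.items()
}
zp = z_prime(unstressed, inactivated)

for name, pct in retention.items():
    print(f"{name}: {pct:.1f}% activity retained")
print(f"Z' = {zp:.2f}")
```

A Z' above roughly 0.5 is commonly taken to indicate a screen with enough separation between controls to rank excipients reliably; lower values suggest the assay window or replicate variability should be improved before screening.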

Protocol 2: Structural Integrity Analysis via Spectroscopic Techniques

Objective: To correlate excipient-mediated stability with changes in enzyme secondary/tertiary structure.

Part A: Circular Dichroism (CD) Spectroscopy

- Prepare enzyme samples (0.2 mg/mL) in the presence/absence of lead excipients post-stress.

- Load samples into a quartz cuvette (path length 0.1 cm for far-UV).

- Record far-UV spectra (190-250 nm) at 20°C with appropriate averaging. Subtract buffer/excipient baseline.

- Analyze mean residue ellipticity. Use algorithms (e.g., SELCON3) to deconvolute percentages of α-helix, β-sheet, and random coil.

Part B: Intrinsic Fluorescence Spectroscopy

- Use same sample set as CD. Set excitation to 280 nm (for Trp/Tyr) or 295 nm (Trp only).

- Record emission spectrum from 300-400 nm.

- Monitor shifts in λmax (indicative of Trp microenvironment changes) and fluorescence intensity (quenching due to aggregation).

Visualization of Concepts and Workflows

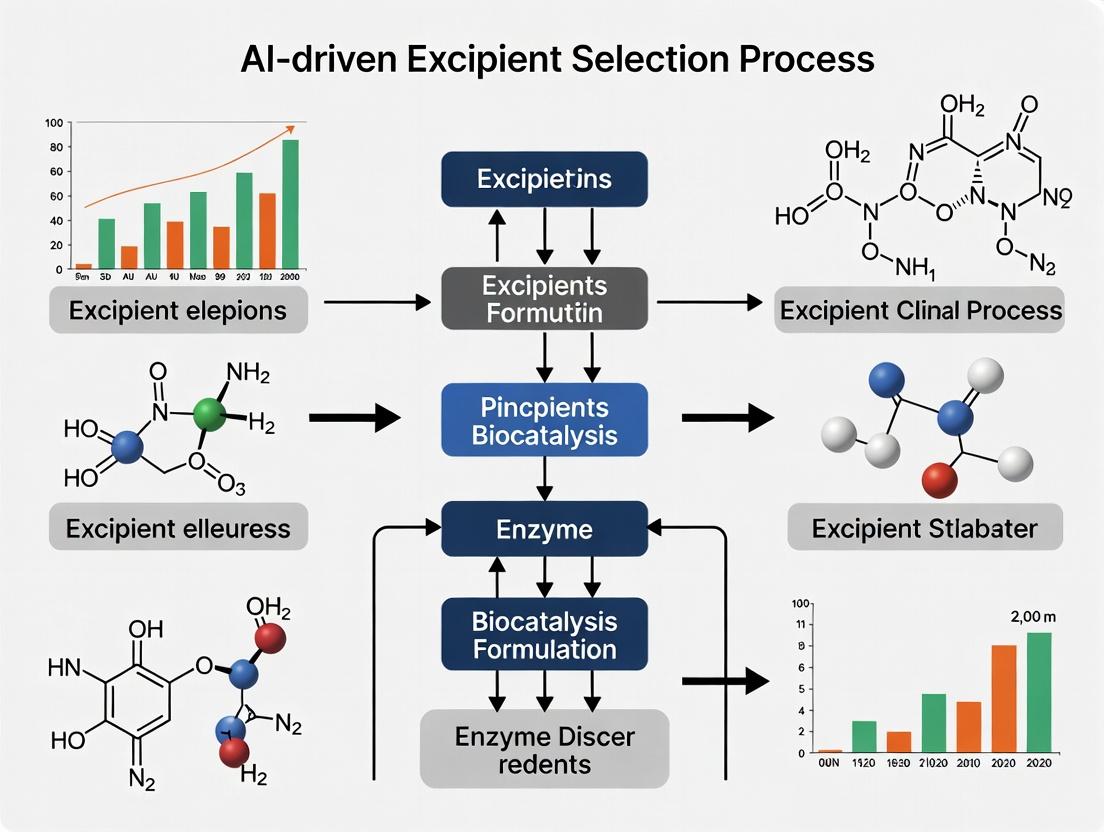

Diagram Title: AI-Driven Excipient Screening Cycle

Diagram Title: Enzyme Degradation Pathways & Excipient Action

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Enzyme Stability Research

| Item | Example Product/Catalog | Primary Function in Protocol |
|---|---|---|
| Enzyme Standards | Lysozyme (L6876, Sigma), Lactate Dehydrogenase (L2500, Sigma) | Model proteins for method development and control studies. |
| Excipient Library | Hampton Research Excipient Screen (HR2-428), Sigma Biologics Excipients | Pre-formulated, high-purity compounds for systematic screening. |
| Microplate Assay Kits | ThermoFisher EnzChek (E6638), Promega Nano-Glo | Fluorogenic/Chromogenic substrates for rapid activity quantification. |
| Dynamic/Static Light Scattering | Malvern Zetasizer Ultra, Wyatt DynaPro Plate Reader III | Measures hydrodynamic radius, aggregation, and thermal unfolding (Tm). |
| Circular Dichroism Spectrometer | Jasco J-1500, Applied Photophysics Chirascan | Quantifies secondary structure changes in far-UV region. |
| Fluorescence Spectrometer | Horiba Fluorolog, Agilent Cary Eclipse | Monitors tertiary structure via intrinsic Trp/Tyr fluorescence. |
| Stability Storage Chambers | Cytiva Bioprocess Containers, CMS Incubated Shakers | Provides controlled stress environments (temperature, agitation). |
| AI/Data Analysis Software | Schrödinger LiveDesign, Dotmatics, Python (scikit-learn, TensorFlow) | Platform for QSAR modeling, data integration, and predictive analytics. |

Within AI-driven excipient selection for enzyme formulation research, understanding the fundamental physical and chemical degradation pathways is paramount. Enzymes, as proteinaceous therapeutics, are susceptible to multiple instability mechanisms during manufacturing, storage, and delivery. Aggregation (non-native protein-protein interactions), denaturation (loss of native structure), and surface adsorption (loss to interfaces) represent the primary challenges that formulation scientists must mitigate. These pathways lead to a loss of biological activity, altered pharmacokinetics, and potential immunogenicity. This Application Note details experimental protocols to characterize these instability mechanisms, providing the quantitative data required to train and validate AI models for predictive excipient discovery.

Quantitative Data on Enzyme Instability

Table 1: Common Stress Conditions and Resultant Enzyme Instability Profiles

| Stress Condition | Primary Instability Mechanism | Typical Impact on Activity (%) | Key Analytical Readout |
|---|---|---|---|
| Agitation (Shear) | Surface Adsorption & Aggregation | 40-80% loss | Turbidity (A340), SEC-HPLC |
| Thermal (40-60°C) | Denaturation & Aggregation | 60-100% loss | Intrinsic Fluorescence, DSC (Tm) |
| Freeze-Thaw Cycling | Surface-Induced Denaturation | 20-50% loss | Activity Assay, Subvisible Particles |
| Low pH (pH 3-5) | Acid-Induced Denaturation | Varies widely | Far-UV CD, Trp Fluorescence |
| High Concentration | Concentration-Dependent Aggregation | 10-30% loss | Dynamic Light Scattering (DLS) |

Table 2: Exemplar Stabilizing Excipients and Their Proposed Mechanisms

| Excipient Class | Example | Primary Protective Mechanism | Target Instability Pathway |
|---|---|---|---|
| Sugar/Polyol | Trehalose, Sucrose | Preferential Exclusion, Vitrification | Denaturation, Surface Adsorption |
| Surfactant | Polysorbate 20, Poloxamer 188 | Competitive Interface Adsorption | Surface Adsorption, Aggregation |
| Amino Acids | Arginine, Glycine | Complex (can stabilize or destabilize) | Aggregation |
| Salts | MgSO4, (NH4)2SO4 | Ionic Strength Modulation, Specific Binding | Denaturation |
| Polymers | PEG, HPMC | Steric Hindrance, Increased Viscosity | Aggregation, Surface Adsorption |

Experimental Protocols

Protocol 1: Forced Degradation via Agitation to Study Aggregation & Surface Adsorption

Objective: To induce and quantify subvisible particle formation and activity loss due to interfacial stress.

Materials: Enzyme of interest, formulation buffer, magnetic stir plate & micro stir bars, hydrophobic (e.g., polypropylene) vials, dynamic light scattering (DLS) instrument, microplate reader.

Procedure:

- Prepare 1 mL of enzyme formulation at a target concentration (e.g., 1 mg/mL) in a low-protein-binding microcentrifuge tube.

- Transfer 500 µL to a 2 mL polypropylene vial containing a 5x2 mm micro stir bar.

- Place the vial on a magnetic stir plate inside a controlled-temperature incubator (e.g., 25°C). Stir at 1000 rpm for 120 minutes.

- At t=0, 30, 60, and 120 minutes, remove 50 µL aliquots.

- Activity Assay: Immediately dilute aliquot into activity assay buffer and measure initial reaction velocity.

- DLS: Dilute aliquot 1:10 in filtered formulation buffer and measure hydrodynamic radius (Rh) and polydispersity index (PDI).

- Turbidity: Measure absorbance at 340 nm (A340) of the undiluted aliquot.

- Data Analysis: Plot % initial activity, Rh, PDI, and A340 versus time. Correlate activity loss with increases in Rh and turbidity.

Protocol 2: Thermal Denaturation Monitored by Intrinsic Fluorescence

Objective: To determine the melting temperature (Tm) and profile of enzyme unfolding.

Materials: Enzyme sample, fluorescent plate reader with thermal gradient control, black 96- or 384-well plates.

Procedure:

- Prepare enzyme sample in formulation buffer at ~0.1-0.2 mg/mL. Filter through a 0.22 µm filter.

- Pipette 50 µL of sample into multiple wells of a black-walled plate. Include a blank (buffer only).

- Seal the plate with an optical seal.

- Configure the plate reader: Excitation = 280 nm, Emission = 320-380 nm (scan or use 340 nm). Set a thermal ramp from 25°C to 95°C at a rate of 1°C/min, with a fluorescence reading every 1°C.

- Run the assay.

- Data Analysis: Plot fluorescence intensity (or λmax shift) vs. temperature. Fit the sigmoidal curve to a Boltzmann equation or calculate the first derivative to determine the apparent Tm. This serves as a key stability parameter for AI model input.
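The first-derivative route to an apparent Tm can be sketched as follows. This is a minimal illustration on a synthetic two-state curve; the signal values, the true midpoint of 62 °C, and the helper name `apparent_tm` are assumptions made for the example, and a full Boltzmann fit would normally be done with a curve-fitting library.

```python
import math


def apparent_tm(temps, signal):
    """Return the temperature at which |d(signal)/dT| is largest.

    Central differences are used, so the first and last readings are never
    selected as the transition midpoint.
    """
    best_temp, best_slope = None, -1.0
    for i in range(1, len(temps) - 1):
        slope = abs((signal[i + 1] - signal[i - 1]) / (temps[i + 1] - temps[i - 1]))
        if slope > best_slope:
            best_temp, best_slope = temps[i], slope
    return best_temp


# Synthetic unfolding curve (hypothetical): one reading per 1 °C from 25 to 95 °C,
# with fluorescence intensity falling sigmoidally as the enzyme unfolds.
TRUE_TM, SLOPE_FACTOR = 62.0, 2.5
temps = [float(t) for t in range(25, 96)]
signal = [
    1000.0 - 600.0 / (1.0 + math.exp((TRUE_TM - t) / SLOPE_FACTOR))
    for t in temps
]

tm = apparent_tm(temps, signal)
print(f"Apparent Tm = {tm:.1f} °C")
```

With real data, noise usually makes light smoothing advisable before differentiating, and the resolution of the result is limited by the 1 °C reading interval.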

Protocol 3: Static Incubation for Surface Adsorption Quantification

Objective: To measure loss of enzyme due to adsorption to container surfaces.

Materials: Enzyme formulation, different material vials (e.g., glass, polypropylene, siliconized glass), HPLC system with UV detection.

Procedure:

- Prepare a concentrated enzyme stock solution and dilute into the desired formulation buffer to the target concentration (e.g., 0.1 mg/mL).

- Aliquot 1 mL of the solution into triplicate vials of each material type. Fill the vials to the same headspace ratio.

- Incubate vials quiescently at the target temperature (e.g., 5°C and 25°C) for 24-72 hours.

- At each time point, gently invert each vial 3 times to mix. Carefully remove a 100 µL aliquot from the center of the solution, avoiding the walls and meniscus.

- Quantify the remaining soluble protein concentration using a validated reverse-phase or size-exclusion HPLC method.

- Data Analysis: Calculate % recovery relative to the t=0 control. Plot % recovery vs. time for each material and temperature.

Visualization Diagrams

Diagram 1: Primary Enzyme Instability Pathways

Title: Enzyme Degradation Pathways

Diagram 2: AI-Driven Formulation Workflow

Title: AI Excipient Discovery Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Enzyme Stability Studies

| Item | Function in Stability Studies | Example Product/Criteria |
|---|---|---|
| Low-Protein-Binding Tubes/Vials | Minimizes non-specific surface adsorption loss during sample handling. | Polypropylene tubes; Siliconized glass vials. |
| Non-Ionic Surfactant (e.g., Polysorbate 20/80) | Competitive inhibitor of surface adsorption at air-liquid and solid interfaces. | Pharmaceutical grade, low peroxide/peroxide-free. |
| Stabilizing Sugars (Lyoprotectants) | Protects against thermal and freeze-induced denaturation via preferential exclusion. | Trehalose, Sucrose (high purity, endotoxin-controlled). |
| Dynamic Light Scattering (DLS) Instrument | Measures hydrodynamic size and detects submicron aggregates in solution. | Z-average size, PDI, and size distribution profiles. |
| Differential Scanning Calorimetry (DSC) | Directly measures thermal unfolding temperature (Tm) and enthalpy. | Microcalorimeter with high-sensitivity cell. |
| Intrinsic Fluorescence Spectrometer | Probes conformational changes via tryptophan environment sensitivity. | Plate reader with thermal control or cuvette-based. |
| Size-Exclusion HPLC (SEC-HPLC) | Quantifies soluble monomer loss and aggregate/fragment formation. | Column with appropriate separation range (e.g., <1-500 kDa). |
| Forced Degradation Chamber | Provides controlled, reproducible stress conditions (temp, agitation, light). | Incubator shaker with precise rpm and temperature control. |

Within the broader thesis on AI-driven excipient selection for enzyme formulation research, this document details the traditional, empirical approach. This process, characterized by iterative physical experimentation, remains a bottleneck in biopharmaceutical development, consuming significant resources before identifying optimal stabilizers for enzyme-based therapeutics.

The Cost of Tradition: Quantitative Analysis

The following table summarizes the resource expenditure associated with traditional excipient screening for a single enzyme formulation project, based on current industry and academic benchmarks.

Table 1: Estimated Resource Allocation for Traditional Empirical Excipient Screening

| Resource Category | Estimated Quantity/Cost | Time Allocation | Primary Function |
|---|---|---|---|
| Excipient Library | 50-200 unique compounds | N/A | Provide a broad chemical space for initial screening (buffers, sugars, polyols, polymers, surfactants). |
| Enzyme API | 100-500 mg | N/A | The active pharmaceutical ingredient requiring stabilization. |
| Laboratory Materials (vials, plates, buffers) | $2,000 - $5,000 | N/A | Consumables for sample preparation and storage. |
| High-Throughput Screening (HTS) Assays | 1,500 - 5,000 discrete samples | 2-4 weeks | Initial assessment of activity and aggregation. |
| Analytical Characterization (DSC, DLS, CD, HPLC) | 200 - 500 samples | 4-8 weeks | In-depth stability profiling (thermal, conformational, colloidal). |
| Formulation Scientist FTE | 0.5 - 1.5 Full-Time Equivalent | 3-6 months | Design, execute, and analyze experiments. |
| Total Project Duration | N/A | 6-9 months | From initial design to lead excipient candidate identification. |
| Total Direct Cost | $50,000 - $150,000 | N/A | Excluding capital equipment and overhead. |

Detailed Experimental Protocols

Protocol 1: High-Throughput Excipient Screening for Enzyme Stability

Objective: To rapidly identify excipients that preserve enzymatic activity after a stress condition (e.g., thermal stress).

Materials: See "The Scientist's Toolkit" below.

Procedure:

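- Excipient Stock Preparation: Prepare concentrated stock solutions of all candidate excipients in the primary formulation buffer: 1 M for small-molecule excipients (e.g., trehalose, sucrose, sorbitol, arginine) and a percent-based stock (e.g., 1% w/v) for surfactants such as Polysorbate 80, which cannot practically be prepared at 1 M. Filter sterilize (0.22 µm).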

- 96-Well Plate Setup: In a 96-well plate, use a liquid handler to dispense buffer and excipient stocks to create a final volume of 90 µL per well with excipient concentrations spanning 0-500 mM (or 0-0.1% for surfactants). Include buffer-only controls.

- Enzyme Dosing: Add 10 µL of enzyme stock solution to each well (final concentration 0.1-1 mg/mL). Mix thoroughly via plate shaking.

- Stress Application: Seal the plate and incubate in a thermocycler or stable incubator at a stress temperature (e.g., 40°C or 50°C) for 24 hours. A control plate is stored at 4°C.

- Activity Assay: Cool the plate to assay temperature (e.g., 25°C). Add enzyme-specific substrate to each well. Continuously monitor product formation (e.g., absorbance, fluorescence) for 10-30 minutes using a plate reader.

- Data Analysis: Calculate residual activity (%) for each well relative to the non-stressed control. Excipients yielding >90% residual activity are selected for secondary analysis.
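The activity assay and hit-selection steps above can be sketched as a slope calculation followed by a threshold. This is an illustrative outline only: the kinetic traces and well names are hypothetical, and the slope is taken over the whole read window, which assumes the reaction stays in its linear initial-rate phase.

```python
def initial_velocity(times_min, readings):
    """Least-squares slope of signal versus time (signal units per minute)."""
    n = len(times_min)
    t_mean = sum(times_min) / n
    r_mean = sum(readings) / n
    sxy = sum((t - t_mean) * (r - r_mean) for t, r in zip(times_min, readings))
    sxx = sum((t - t_mean) ** 2 for t in times_min)
    return sxy / sxx


def residual_activity(stressed_rate, control_rate):
    """Stressed-well rate as a percentage of the non-stressed control rate."""
    return 100.0 * stressed_rate / control_rate


# Hypothetical absorbance traces read every 2 minutes for 10 minutes.
times = [0, 2, 4, 6, 8, 10]
control_trace = [0.050, 0.150, 0.250, 0.350, 0.450, 0.550]  # 4 °C control plate
stressed_traces = {
    "trehalose_250mM": [0.050, 0.146, 0.242, 0.338, 0.434, 0.530],
    "buffer_only": [0.050, 0.090, 0.130, 0.170, 0.210, 0.250],
}

control_rate = initial_velocity(times, control_trace)
residual = {
    name: residual_activity(initial_velocity(times, trace), control_rate)
    for name, trace in stressed_traces.items()
}

# Apply the >90% residual-activity criterion from the protocol.
hits = [name for name, pct in residual.items() if pct > 90.0]
print(residual, hits)
```

In practice each condition would be run in replicate and the threshold applied to the mean, so that a single noisy well does not promote or exclude an excipient.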

Protocol 2: Forced Degradation and Long-Term Stability Study

Objective: To evaluate the physical and chemical stability of lead formulations under accelerated conditions.

Materials: See "The Scientist's Toolkit" below.

Procedure:

- Formulation: Prepare 1 mL of the top 5-10 candidate formulations (from Protocol 1) and a buffer control at the target enzyme concentration (e.g., 1 mg/mL). Filter (0.22 µm) into 2 mL sterile glass vials.

- Study Design: Place triplicate vials of each formulation into stability chambers at:

- 2-8°C (reference condition)

- 25°C / 60% RH (accelerated)

- 40°C / 75% RH (stress)

- Sampling: Remove one vial from each condition at predetermined time points (e.g., 0, 1, 2, 4, 8, 12 weeks).

- Analysis Suite:

- Size-Exclusion HPLC (SE-HPLC): Quantify soluble aggregate and monomer content.

- Dynamic Light Scattering (DLS): Measure hydrodynamic radius and polydispersity index.

- Differential Scanning Calorimetry (DSC): Determine the enzyme's thermal unfolding midpoint (Tm) in each formulation.

- Visual Inspection: Note any precipitation or color change.

- Stability Modeling: Use Arrhenius or other models to extrapolate long-term stability at recommended storage temperatures (e.g., 2-8°C).
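As a sketch of the Arrhenius extrapolation mentioned in the last step, the block below fits ln k = ln A - Ea/(RT) to rate constants from the elevated-temperature arms and predicts the rate at 5 °C. The rate constants are hypothetical, and the approach assumes a single degradation mechanism with first-order loss across the whole temperature range, which often does not hold for proteins.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1


def fit_arrhenius(temps_c, rate_constants):
    """Least-squares fit of ln k against 1/T; returns (ln_A, Ea in J/mol)."""
    x = [1.0 / (t + 273.15) for t in temps_c]
    y = [math.log(k) for k in rate_constants]
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    slope = (
        sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
        / sum((a - x_mean) ** 2 for a in x)
    )
    intercept = y_mean - slope * x_mean
    return intercept, -slope * R


def predict_k(ln_a, ea, temp_c):
    """Rate constant predicted at temp_c (°C) from the fitted parameters."""
    return math.exp(ln_a - ea / (R * (temp_c + 273.15)))


# Hypothetical first-order rate constants (day^-1) from the 25 °C and 40 °C arms.
temps_c = [25.0, 40.0]
observed_k = [0.0100, 0.0450]

ln_a, ea = fit_arrhenius(temps_c, observed_k)
k_5c = predict_k(ln_a, ea, 5.0)
t90_5c = math.log(1.0 / 0.9) / k_5c  # days until 90% activity remains

print(f"Ea = {ea / 1000:.1f} kJ/mol, k(5 °C) = {k_5c:.5f} per day, t90 = {t90_5c:.0f} days")
```

With only two temperatures the line passes exactly through both points, so the fit gives no indication of how well the Arrhenius assumption holds; adding a third stress temperature, and confirming against the real-time 2-8°C arm, is the usual safeguard.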

Visualizations

Traditional Excipient Screening Workflow

Enzyme Degradation Pathways Under Stress

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for Traditional Excipient Screening Experiments

| Item Name | Function in Experiment |
|---|---|
| Excipient Library (Pharma Grade) | Provides a defined, high-purity set of GRAS (Generally Recognized as Safe) compounds for screening, ensuring regulatory relevance. |
| Enzyme-Specific Fluorogenic/Kinetic Assay Kit | Enables high-throughput, sensitive quantification of enzymatic activity in 96- or 384-well plate formats for rapid excipient ranking. |
| Size-Exclusion HPLC (SE-HPLC) Column | Separates and quantifies monomeric enzyme from higher-order soluble aggregates, a critical quality attribute for formulation stability. |
| Dynamic Light Scattering (DLS) Plate Reader | Allows rapid, low-volume measurement of hydrodynamic size and particle formation across hundreds of formulation samples. |
| Differential Scanning Calorimetry (DSC) Microcalorimeter | Measures the thermal unfolding temperature (Tm) of the enzyme, directly indicating excipient-induced conformational stabilization. |
| Forced Degradation/Stability Chambers | Provide controlled temperature and humidity environments for accelerated stability studies, predicting long-term shelf life. |
| Automated Liquid Handling Workstation | Enables precise, reproducible preparation of large excipient-enzyme formulation matrices, minimizing human error and variability. |

Within AI-driven excipient selection for enzyme formulation research, understanding the mechanistic roles of key excipient classes is paramount. Excipients are not inert; they are functional components that stabilize, buffer, and protect active enzymes from degradation during processing and storage. This application note details the modes of action, quantitative data, and experimental protocols for evaluating stabilizers, buffers, and surfactants, providing a foundational dataset for machine learning model training.

Stabilizers: Modes of Action and Application

Stabilizers protect enzyme conformation and prevent aggregation, surface adsorption, and chemical degradation (e.g., deamidation, oxidation). Their primary modes include preferential exclusion, vitrification, and specific binding.

Table 1: Common Stabilizers and Their Quantitative Effects on Enzyme Stability

| Stabilizer Class | Example Excipients | Typical Conc. Range | Primary Mode of Action | Measurable Outcome (Example) |
|---|---|---|---|---|
| Sugars | Sucrose, Trehalose | 5-15% (w/v) | Preferential Exclusion, Vitrification | ΔTm increase of 5-10°C |
| Polyols | Sorbitol, Glycerol | 5-20% (w/v) | Preferential Exclusion, Solvent Modifier | Reduction in aggregation by >50% |
| Amino Acids | Glycine, Arginine | 50-200 mM | Preferential Exclusion, Specific Ion Effects | Suppression of surface adsorption |
| Polymers | PEG 3350, HPMC | 0.1-1% (w/v) | Steric Stabilization, Viscosity Enhancer | Increased shelf-life by 2x |
| Proteins | HSA, Gelatin | 0.1-1% (w/v) | Competitive Adsorption, Molecular Chaperone | Recovery of activity >90% after shear stress |

Protocol 1.1: Differential Scanning Fluorimetry (DSF) to Determine Thermal Stabilization (Tm Shift)

Objective: Quantify the stabilizing effect of an excipient on an enzyme's thermal denaturation midpoint (Tm).

Materials: Purified enzyme, excipient stocks, SYPRO Orange dye, real-time PCR instrument.

Procedure:

- Prepare a master mix of enzyme at 1-5 µM in a relevant buffer (e.g., 20 mM Histidine).

- Add SYPRO Orange dye to a final 5X concentration.

- Aliquot 20 µL of master mix into PCR plate wells. Add 5 µL of excipient solution or buffer control.

- Seal plate and centrifuge. Run DSF protocol from 25°C to 95°C with a gradual ramp (e.g., 1°C/min).

- Analyze raw fluorescence data. Determine Tm as the inflection point of the sigmoidal unfolding curve.

- Calculate ΔTm = Tm(with excipient) - Tm(control).

Buffers: Modes of Action and Application

Buffers maintain formulation pH, which is critical for enzyme protonation state, solubility, and catalytic activity. They can also directly interact with the protein surface.

Table 2: Common Buffers and Their Properties for Enzyme Formulations

| Buffer | pKa at 25°C | Useful pH Range | Key Consideration for Enzymes |
|---|---|---|---|
| Citrate | 3.13, 4.76, 6.40 | 3.0-6.2 | Chelating agent, may affect metalloenzymes |
| Histidine | 1.82, 6.04, 9.09 | 5.5-7.0 | Low temperature coefficient, common in mAbs |
| Phosphate | 2.15, 7.20, 12.38 | 6.2-8.2 | Can precipitate with divalent cations |
| Tris | 8.06 | 7.0-9.0 | Significant temperature and concentration effects |
| Succinate | 4.21, 5.64 | 4.0-6.0 | Can participate in biological reactions |

Protocol 2.1: pH-Rate Profile Analysis for Buffer Selection

Objective: Determine the optimal pH for enzyme stability and identify appropriate buffer systems.

Materials: Enzyme, buffers covering pH 3-9 (e.g., citrate, phosphate, Tris), activity assay reagents.

Procedure:

- Prepare 0.1 M buffer solutions across the target pH range, adjusting with HCl/NaOH.

- Incubate enzyme (at low concentration) in each buffer at 4°C and 25°C. Aliquot samples at t=0, 1, 3, 7 days.

- For each aliquot, immediately assay residual enzymatic activity under standard conditions.

- Plot % residual activity vs. pH. The optimal stability pH is where activity loss is minimal.

- Validate by conducting long-term stability studies at the selected pH/buffer.

Surfactants: Modes of Action and Application

Surfactants (non-ionic) primarily mitigate interfacial stress (air-liquid, solid-liquid) that leads to enzyme unfolding and aggregation. They form a protective layer at interfaces.

Table 3: Common Non-Ionic Surfactants in Enzyme Formulations

| Surfactant | Typical Conc. Range | HLB Value | Key Property & Consideration |
|---|---|---|---|
| Polysorbate 20 (PS20) | 0.001-0.1% (w/v) | 16.7 | CMC ~0.06 mM; susceptible to oxidation |
| Polysorbate 80 (PS80) | 0.001-0.1% (w/v) | 15.0 | CMC ~0.01 mM; less hydrophilic than PS20 |
| Poloxamer 188 | 0.001-0.1% (w/v) | 29.0 | CMC ~0.02 mM; low toxicity, often in biologics |
| Brij-35 | 0.001-0.05% (w/v) | 16.9 | CMC ~0.09 mM; very stable to oxidation |

Protocol 3.1: Agitation Stress Test to Evaluate Surfactant Protection

Objective: Assess the ability of a surfactant to protect against air-liquid interfacial stress.

Materials: Enzyme formulation with/without surfactant, orbital shaker, microcentrifuge tubes.

Procedure:

- Prepare 1 mL samples of enzyme (e.g., 1 mg/mL) in primary container (e.g., 2 mL glass vial or microcentrifuge tube). Include control (no surfactant) and test (with 0.01-0.05% surfactant).

- Secure containers horizontally on an orbital shaker. Agitate at 250 rpm at 25°C for a defined period (e.g., 24h).

- At defined time points, remove samples and centrifuge briefly to settle any large bubbles.

- Analyze samples for: a) Sub-visible particles (via light obscuration or microflow imaging), b) Soluble aggregates (via SE-HPLC), c) Residual activity.

- Calculate % protection = [1 - (Activity loss with surfactant / Activity loss without surfactant)] x 100.
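A short worked example of the protection formula, using hypothetical activity losses (48% without surfactant, 6% with), may help fix the convention. The function name and the guard against a zero-loss control are illustrative choices, not part of the protocol.

```python
def percent_protection(loss_with_surfactant, loss_without_surfactant):
    """% protection = [1 - (loss with surfactant / loss without surfactant)] x 100."""
    if loss_without_surfactant <= 0:
        raise ValueError("control shows no activity loss; protection is undefined")
    return (1.0 - loss_with_surfactant / loss_without_surfactant) * 100.0


# Hypothetical losses (% of initial activity) after 24 h agitation.
protection = percent_protection(loss_with_surfactant=6.0, loss_without_surfactant=48.0)
print(f"{protection:.1f}% protection")
```

A value of 100% means the surfactant fully prevented the agitation-induced loss, 0% means no benefit, and a negative value means the surfactant made the loss worse.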

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for Excipient-Efficacy Experiments

| Item | Function & Application |
|---|---|
| Real-time PCR instrument with FRET capability | For running DSF/meltscan assays to measure thermal stability (Tm). |
| SYPRO Orange dye | Environment-sensitive fluorescent probe for DSF; binds hydrophobic patches exposed upon unfolding. |
| Microflow Imaging (MFI) Particle Analyzer | Quantifies and images sub-visible particles (2-100 µm) resulting from aggregation stress. |
| Size-Exclusion High-Performance Liquid Chromatography (SE-HPLC) | Separates and quantifies monomer, fragments, and soluble aggregates in stressed samples. |
| Forced Degradation Chamber (e.g., with UV, temperature control) | Provides controlled stress conditions (light, heat) for accelerated stability studies. |
| Dynamic Light Scattering (DLS) Instrument | Measures hydrodynamic radius and polydispersity index for early aggregation detection. |

AI Integration Workflow

Title: AI-Driven Excipient Selection Workflow

Modes of Action Diagram

Title: Excipient Classes Combat Enzyme Degradation Pathways

Within the specialized field of enzyme stabilization for biologics, excipient selection remains a critical, yet empirically driven challenge. The broader thesis posits that AI-driven approaches can systematically deconvolute excipient-enzyme interactions, moving formulation from an art to a predictive science. A primary pillar of this thesis is the utilization of historical formulation data—stability studies, spectroscopic analyses, and activity assays—as a foundational training set for machine learning models. This application note details protocols for curating, processing, and leveraging this "goldmine" to train models for predictive excipient selection.

Data Curation and Preprocessing Protocols

Protocol 2.1: Data Aggregation from Historical Stability Studies

Objective: To compile a unified dataset from disparate historical sources (electronic lab notebooks, LIMS, published literature).

Materials & Workflow:

- Source Identification: Locate data from:

- Forced degradation studies (thermal, pH, shear stress).

- Long-term real-time stability studies.

- Accelerated stability studies.

- Key Data Extraction: For each formulation condition, extract:

- Enzyme Parameters: Name, source, concentration, initial activity (IU/mg).

- Formulation Parameters: Buffer identity, pH, ionic strength.

- Excipient Parameters: Identity, concentration, functional class (sugar, polyol, amino acid, surfactant, polymer).

- Stability Metrics: % Activity remaining over time (t~1~, t~2~, t~final~), aggregation percentage (by SEC-HPLC or DLS), sub-visible particle count.

- Environmental Conditions: Storage temperature (°C), relative humidity (%).

- Normalization: Normalize all activity and concentration data to a standard unit (e.g., IU/mL, mg/mL, molarity). pH and temperature are absolute.

Output: A structured .csv or relational database table.
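The normalization step can be sketched as a small unit-conversion helper. The record layout (`excipient`, `conc_value`, `conc_unit`) is a hypothetical schema chosen for this example, and only three excipients are included in the molar-mass lookup.

```python
# Molar masses in g/mol for the excipients used in this example.
MOLAR_MASS = {"sucrose": 342.30, "sorbitol": 182.17, "glycine": 75.07}


def to_millimolar(excipient, value, unit):
    """Convert a concentration in '% w/v', 'M' or 'mM' to mM."""
    if unit == "mM":
        return value
    if unit == "M":
        return value * 1000.0
    if unit == "% w/v":
        grams_per_litre = value * 10.0  # 1% w/v = 1 g per 100 mL = 10 g/L
        return grams_per_litre / MOLAR_MASS[excipient] * 1000.0
    raise ValueError(f"unsupported unit: {unit!r}")


def normalize_record(record):
    """Return a copy of the record with the concentration expressed in mM."""
    normalized = dict(record)
    normalized["conc_mM"] = to_millimolar(
        record["excipient"], record["conc_value"], record["conc_unit"]
    )
    return normalized


# Hypothetical raw records as they might arrive from different sources.
raw_records = [
    {"excipient": "sucrose", "conc_value": 5.0, "conc_unit": "% w/v"},
    {"excipient": "glycine", "conc_value": 0.2, "conc_unit": "M"},
    {"excipient": "sorbitol", "conc_value": 150.0, "conc_unit": "mM"},
]
normalized_records = [normalize_record(r) for r in raw_records]
for rec in normalized_records:
    print(rec["excipient"], round(rec["conc_mM"], 2), "mM")
```

Polymers and surfactants with poorly defined molar mass are usually better left in % w/v as a separate feature rather than forced into molarity.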

Protocol 2.2: Data Cleaning and Feature Engineering

Objective: To transform raw historical data into a clean, feature-rich dataset suitable for ML training.

Methodology:

- Handling Missing Data: For continuous variables (e.g., missing activity at a time point), use k-nearest neighbors (k=5) imputation based on similar formulation conditions. Missing categorical data (e.g., excipient class) are flagged as "Unknown."

- Outlier Detection: Apply the Interquartile Range (IQR) method to stability metrics. Data points >1.5*IQR above the 75th percentile or below the 25th percentile are reviewed for experimental error; if none is found, they are retained but flagged.

- Feature Engineering:

- Create interaction terms between primary excipient and pH.

- Calculate derived stability metrics: Degradation rate constant (k) assuming pseudo-first-order kinetics, t~90~ (time to 90% activity remaining).

- Encode excipients using molecular fingerprints (e.g., Morgan fingerprints via RDKit) for structure-aware models.

Output: A cleaned, augmented feature matrix (X) and target vector (y), e.g., y = degradation rate constant (k) or categorical stability label.
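Two of the steps above, the derived stability metrics and the IQR outlier flag, can be sketched with the standard library alone. Under pseudo-first-order kinetics, ln(A_t/A_0) = -kt, so t~90~ = ln(1/0.9)/k. The time course and the list of rate constants below are synthetic, and the function names are illustrative; k-nearest-neighbor imputation and fingerprint encoding are not shown.

```python
import math
from statistics import quantiles


def first_order_k(times_days, pct_activity):
    """Fit ln(A_t / A_0) = -k t through the origin and return k (day^-1)."""
    a0 = pct_activity[0]
    numerator = sum(t * math.log(a / a0) for t, a in zip(times_days, pct_activity))
    denominator = sum(t * t for t in times_days)
    return -numerator / denominator


def t90_from_k(k):
    """Time (days) until 90% of the initial activity remains."""
    return math.log(1.0 / 0.9) / k


def iqr_flags(values, factor=1.5):
    """True for values beyond factor * IQR outside the quartiles; nothing is removed."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v < low or v > high for v in values]


# Synthetic activity time course generated with k = 0.0051 day^-1.
times = [0, 7, 14, 28]
activity = [100.0 * math.exp(-0.0051 * t) for t in times]
k = first_order_k(times, activity)
t90 = t90_from_k(k)

# Hypothetical rate constants from one formulation family; the last one is suspect.
rate_constants = [0.005, 0.006, 0.007, 0.008, 0.009, 0.010, 0.011, 0.060]
flags = iqr_flags(rate_constants)

print(f"k = {k:.4f} per day, t90 = {t90:.1f} days, flagged = {flags}")
```

Flagged points are only marked for review, matching the protocol's rule that outliers without an identified experimental error are retained.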

Table 1: Summary of Historical Formulation Dataset Composition

| Data Category | Number of Records | Key Parameters | Primary Source |
|---|---|---|---|
| Lysozyme Stability | 1,240 | pH (3-9), Temp (4-60°C), 12 excipients | Internal ELN (2015-2023) |
| Monoclonal Antibody (mAb) Aggregation | 3,560 | Ionic strength, Sucrose (0-10%), Surfactant type | Published literature meta-analysis |
| Protease Activity Retention | 890 | Shear stress cycles, Polyol concentration | Collaborator dataset |
| Overall Compiled Dataset | 5,690 | 45 unique excipients, 5 enzyme classes | Composite |

Table 2: Exemplar Stability Outcomes from Historical Data (Lysozyme, 40°C)

| Formulation Code | pH | Primary Excipient (Conc.) | Degradation Rate Constant k (day⁻¹) | t~90~ (days) | Final Aggregation (%) |

|---|---|---|---|---|---|

| LYS_01 | 4.5 | Sucrose (5% w/v) | 0.0051 | 20.6 | 2.1 |

| LYS_02 | 4.5 | Sorbitol (5% w/v) | 0.0078 | 13.5 | 3.8 |

| LYS_03 | 7.4 | Sucrose (5% w/v) | 0.0214 | 4.9 | 15.7 |

| LYS_04 | 7.4 | Histidine (20 mM) | 0.0123 | 8.5 | 8.2 |

| LYS_05 (Control) | 7.4 | None | 0.0450 | 2.3 | 32.5 |
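
As a worked check on the relationship between k and t~90~ in Table 2, the sketch below assumes the pseudo-first-order model A(t) = A0·exp(-k·t) named in Protocol 2.2, which gives t~90~ = ln(1/0.9)/k. The function name is illustrative.

```python
import math


def t90_from_k(k_per_day):
    """Days until 90% activity remains, assuming A(t) = A0 * exp(-k * t)."""
    if k_per_day <= 0:
        raise ValueError("k must be positive")
    return math.log(1 / 0.9) / k_per_day


# Rate constants taken from Table 2 (day^-1).
table_2_k = {
    "LYS_01": 0.0051,
    "LYS_02": 0.0078,
    "LYS_03": 0.0214,
    "LYS_04": 0.0123,
    "LYS_05": 0.0450,
}

for code, k in table_2_k.items():
    print(f"{code}: t90 = {t90_from_k(k):.2f} days")
```

The computed values agree with the tabulated t~90~ column to within rounding.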

AI Model Training and Validation Protocol

Protocol 4.1: Building a Predictive Model for Excipient Efficacy

Objective: To train a supervised ML model that predicts a stability metric (y) from formulation features (X).

Experimental Workflow:

Diagram Title: AI Model Training Workflow for Formulation Prediction

Detailed Methodology:

- Data Partitioning: Perform an 80/20 stratified split on the primary enzyme class to ensure representation in both training and test sets.

- Model Selection & Training: Implement a comparative study using scikit-learn, with the separate XGBoost library for the boosted model:

- Random Forest Regressor (for continuous k or t~90~).

- Gradient Boosting Regressor (e.g., XGBoost).

- Multi-layer Perceptron (Neural Network).

- Baseline: Simple linear regression using excipient concentration as the sole feature.

- Hyperparameter Tuning: Use 5-fold cross-validated grid search over key parameters (e.g., n_estimators and max_depth for RF; learning_rate for XGBoost).

- Validation Metrics:

- For Regression (k, t~90~): Mean Absolute Error (MAE), R² score.

- For Classification (Stable/Unstable): F1-score, Precision-Recall AUC.

- Interpretability: Apply SHAP (SHapley Additive exPlanations) to identify the top excipient features driving stability predictions.
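
A compact sketch of the partitioning, tuning, and baseline-comparison steps is given below. It runs on synthetic data generated inside the block (not the historical dataset), covers only the Random Forest and the linear baseline, and uses a deliberately small hyperparameter grid; the feature names and the data-generating formula are assumptions made purely for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import GridSearchCV, train_test_split

rng = np.random.default_rng(0)
n = 200
enzyme_class = rng.integers(0, 5, size=n)   # hypothetical labels for 5 enzyme classes
conc = rng.uniform(0, 10, size=n)           # excipient concentration (% w/v)
pH = rng.uniform(3, 9, size=n)
# Synthetic degradation rate constant: purely illustrative, not measured data.
k = 0.05 - 0.003 * conc + 0.004 * (pH - 5) ** 2 + rng.normal(0, 0.002, n)
X = np.column_stack([conc, pH, enzyme_class])

# 80/20 split stratified on enzyme class.
X_tr, X_te, y_tr, y_te = train_test_split(
    X, k, test_size=0.2, stratify=enzyme_class, random_state=0
)

# 5-fold cross-validated grid search over a small Random Forest grid.
search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid={"n_estimators": [50, 100], "max_depth": [3, None]},
    cv=5,
    scoring="neg_mean_absolute_error",
)
search.fit(X_tr, y_tr)

# Baseline: linear regression on excipient concentration only.
baseline = LinearRegression().fit(X_tr[:, [0]], y_tr)

rf_pred = search.best_estimator_.predict(X_te)
lin_pred = baseline.predict(X_te[:, [0]])
results = {
    "random_forest": {"mae": mean_absolute_error(y_te, rf_pred), "r2": r2_score(y_te, rf_pred)},
    "linear_baseline": {"mae": mean_absolute_error(y_te, lin_pred), "r2": r2_score(y_te, lin_pred)},
}
print(search.best_params_, results)
```

Keeping the single-feature baseline in the comparison shows how much of the predictive performance comes from the additional formulation features rather than from concentration alone.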

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Validating AI-Predicted Formulations

| Item | Function in Validation Protocol | Example Product/Catalog |

|---|---|---|

| Differential Scanning Calorimetry (DSC) | Measures thermal unfolding temperature (T~m~), a key stability indicator of excipient effect on protein. | Nano DSC, TA Instruments |

| Dynamic Light Scattering (DLS) | Assesses colloidal stability (hydrodynamic radius, polydispersity) to predict aggregation propensity. | Zetasizer Ultra, Malvern Panalytical |

| Size-Exclusion HPLC (SEC-HPLC) | Quantifies soluble aggregate and fragment formation in stability samples. | Agilent 1260 Infinity II, TSKgel G3000SWxl column |

| Activity Assay Kit | Enzyme-specific fluorometric or colorimetric kit to measure functional activity retention. | EnzChek Protease Assay Kit, Thermo Fisher |

| Forced Degradation Chamber | Provides controlled stress (temperature, humidity, light) for accelerated stability testing. | CTS C40 Climate Chamber, Weiss Technik |

| Molecular Visualization & Cheminformatics Software | Generates excipient fingerprints and analyzes structure-property relationships. | RDKit (Open Source), Schrödinger Maestro |

Logical Framework: From Data to Decision

Diagram Title: AI-Driven Excipient Selection Thesis Framework

AI in Action: Building and Deploying Predictive Models for Smart Formulation

Application Notes: AI-Driven Excipient Selection for Enzyme Stabilization

Comparative Analysis of AI Approaches

The selection of optimal stabilizing excipients for enzyme formulations is a complex, multi-parameter problem. AI tools accelerate this process by modeling non-linear relationships between excipient properties, environmental conditions, and enzyme stability metrics.

Table 1: Comparison of ML vs. DL for Formulation Tasks

| Feature | Traditional Machine Learning (ML) | Deep Learning (DL) |

|---|---|---|

| Optimal Data Size | 10s-100s of formulations | 1000s+ of formulations |

| Input Data Type | Structured (e.g., RDKit descriptors, Hansen parameters) | Structured & Unstructured (e.g., molecular graphs, spectral data) |

| Typical Model | Random Forest, Gradient Boosting, SVM | Graph Neural Networks (GNNs), Convolutional Neural Networks (CNNs) |

| Interpretability | High (Feature importance scores) | Lower (Requires explainable AI techniques) |

| Compute Demand | Moderate | High (GPU often required) |

| Key Strength | Predictive modeling with limited datasets, rapid iteration | Learning complex patterns from high-dimensional raw data |

| Formulation Use Case | Predict stability score from excipient properties | Predict binding affinity from 3D molecular structure |

Table 2: Performance Metrics on Excipient Efficacy Prediction

| Model | Dataset Size | Prediction Target | R² Score | Mean Absolute Error (MAE) |

|---|---|---|---|---|

| Random Forest | 150 formulations | Residual Activity (%) after 30 days | 0.87 | ± 5.2% |

| XGBoost | 150 formulations | Glass Transition Temperature (Tg) | 0.91 | ± 2.1 °C |

| Graph Neural Network | 12,000 molecule graphs | Excipient-Enzyme Binding Energy | 0.79 | ± 0.8 kcal/mol |

| 1D-CNN | 800 FTIR spectra | Secondary Structure Loss | 0.83 | ± 3.7% |

Experimental Protocols

Protocol 1: ML-Based Screening with Limited Dataset

Objective: Predict thermal stability enhancement (%) of a protease using a library of 20 excipients.

Materials:

- Enzyme and substrate.

- Excipient library (sugars, polyols, amino acids, polymers).

- Microplate reader with temperature control.

- Python environment with scikit-learn, pandas, RDKit.

Procedure:

- Dataset Curation: For each excipient at 3 concentrations, measure:

- T_m (melting temperature via DSF).

- Residual Activity after incubation at 50°C for 1 hour.

- Feature Engineering: Compute 200+ molecular descriptors for each excipient using RDKit (e.g., logP, hydrogen bond donors/acceptors, topological polar surface area).

- Model Training: Split data (80/20 train/test). Train a Random Forest Regressor to predict Residual Activity using excipient descriptors and concentration as features.

- Validation: Validate model on test set. Use permutation importance to identify key excipient properties driving stability.
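
The training and permutation-importance steps of this protocol might look like the sketch below. The descriptor values and the residual-activity target are synthetic placeholders (20 hypothetical excipients at 3 concentrations); in practice the descriptors would come from RDKit and the target from the plate assay.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
feature_names = ["logP", "h_bond_donors", "tpsa", "concentration"]

# Placeholder descriptors for 20 hypothetical excipients.
descriptors = np.column_stack([
    rng.normal(0, 1, 20),        # logP
    rng.integers(0, 9, 20),      # hydrogen bond donors
    rng.uniform(20, 200, 20),    # TPSA
])
concentrations = [0.5, 1.0, 2.0]
X = np.array([list(descriptors[i]) + [c] for i in range(20) for c in concentrations])

# Synthetic residual activity (%): driven mostly by H-bond donors, illustrative only.
y = 40 + 5 * X[:, 1] + 3 * X[:, 3] + rng.normal(0, 1, len(X))

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=0)
model = RandomForestRegressor(n_estimators=200, random_state=0).fit(X_tr, y_tr)

# Permutation importance: drop in test score when one feature is shuffled.
perm = permutation_importance(model, X_te, y_te, n_repeats=20, random_state=0)
ranking = sorted(zip(feature_names, perm.importances_mean), key=lambda p: p[1], reverse=True)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

A row-wise random split places the same excipient in both train and test sets; a grouped split by excipient would be a stricter test of generalization to unseen excipients.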

Protocol 2: DL-Driven Molecular Interaction Prediction

Objective: Use a Graph Neural Network (GNN) to predict interaction strength between an enzyme surface and potential excipient molecules.

Materials:

- Public molecular database (e.g., PubChem, ChEMBL).

- Enzyme 3D structure (from PDB).

- High-performance computing cluster with GPU support.

- PyTorch/PyTorch Geometric environment.

Procedure:

- Data Generation: Create a dataset of known protein-ligand complexes with binding affinity (Kd) labels. Represent each molecule as a graph (nodes=atoms, edges=bonds).

- Model Architecture: Implement a GNN where node features include atom type, charge, and hybridization.

- Training: Train the GNN to classify/regress binding affinity. Use a separate test set of known stabilizer-enzyme pairs.

- Screening: Apply the trained model to a virtual library of GRAS (Generally Recognized As Safe) excipients and rank the candidates by predicted interaction score.
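
To make the graph representation (nodes=atoms, edges=bonds) concrete, the sketch below applies two graph-convolution layers to the heavy-atom graph of glycerol using plain NumPy. It is a didactic stand-in for a PyTorch Geometric model, not the protocol's implementation: the weights are random and untrained, and node features are limited to a one-hot atom type (charge and hybridization are omitted).

```python
import numpy as np


def gcn_layer(adj, feats, weight):
    """One graph-convolution step: normalized neighbor aggregation, linear map, ReLU."""
    a_hat = adj + np.eye(adj.shape[0])              # add self-loops
    d_inv_sqrt = np.diag(1.0 / np.sqrt(a_hat.sum(axis=1)))
    propagated = d_inv_sqrt @ a_hat @ d_inv_sqrt @ feats
    return np.maximum(propagated @ weight, 0.0)


# Heavy-atom graph of glycerol, SMILES OCC(O)CO: O0-C1-C2(-O3)-C4-O5
bonds = [(0, 1), (1, 2), (2, 3), (2, 4), (4, 5)]
adj = np.zeros((6, 6))
for i, j in bonds:
    adj[i, j] = adj[j, i] = 1.0

# Node features: one-hot atom type [carbon, oxygen].
feats = np.array([[0, 1], [1, 0], [1, 0], [0, 1], [1, 0], [0, 1]], dtype=float)

rng = np.random.default_rng(0)
w1 = rng.normal(size=(2, 8))    # untrained, illustrative weights
w2 = rng.normal(size=(8, 4))

node_embeddings = gcn_layer(adj, gcn_layer(adj, feats, w1), w2)
graph_embedding = node_embeddings.mean(axis=0)   # mean-pool readout over atoms
print(graph_embedding)
```

In a trained model, the pooled graph embedding would feed a regression head that outputs the predicted interaction score.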

Visualizations

Title: AI Tool Selection Workflow for Formulation

Title: ML Formulation Development Protocol

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for AI-Driven Formulation Experiments

| Item | Function in AI Formulation Research |

|---|---|

| High-Throughput DSF Assay Kits | Generates thermal stability (Tm) data for hundreds of formulations, creating the primary dataset for ML training. |

| RDKit Open-Source Toolkit | Calculates quantitative molecular descriptors (e.g., solubility parameters, charge) for excipients, used as ML model features. |

| Simulated Intestinal/Gastric Fluid | Provides biologically relevant stress conditions for stability testing, ensuring predictive models reflect in vivo performance. |

| Lyophilizer with 96-well capability | Enables preparation of solid dosage forms from micro-formulations for long-term stability studies, expanding data dimensions. |

| Graph Neural Network Library (PyTorch Geometric) | Allows construction of DL models that directly process excipient molecular graphs to predict protein-excipient interactions. |

| Public Protein Data Bank (PDB) Files | Source of 3D enzyme structures for in silico docking studies and for generating inputs for DL models predicting binding sites. |

Application Notes

Within AI-driven excipient selection for enzyme formulation research, raw excipient data is heterogeneous and unstructured. Effective curation and feature engineering transform this data into predictive model inputs that capture physicochemical, interactional, and stability-modifying properties. The primary data domains include:

- Physicochemical Descriptors: Molecular weight, logP, topological polar surface area (TPSA), hydrogen bond donor/acceptor counts, viscosity, and refractive index.

- Interaction Fingerprints: Predicted or measured binding affinities to common enzyme motifs, surface tension modulation, and colloidal interaction parameters.

- Stability Indices: Empirical measures of stabilization (ΔTm, k degradation) from historical formulation studies, often sparse and requiring imputation.

Table 1: Curated Quantitative Excipient Property Domains for Feature Engineering

| Property Domain | Example Features | Typical Units/Range | Data Source |

|---|---|---|---|

| Molecular Physicochemistry | Molecular Weight, logP, TPSA, H-Bond Donors, Rotatable Bonds | Da, unitless, Ų, count, count | PubChem, ChemSpider, in silico calculation |

| Solution Behavior | Viscosity (concentration-dependent), Surface Tension, Refractive Index | cP, mN/m, unitless | Handbook data, experimental protocols |

| Protein Interaction Potential | Predicted ΔG binding (to model surfaces), Ionic Interaction Score, Hydrophobicity Index | kcal/mol, unitless, unitless | Molecular docking, sequence-based predictors |

| Empirical Stability Outcome | ΔTm (Stabilization), Aggregation Rate Reduction (%), Activity Retention (%) | °C, %, % (vs. control) | Historical formulation studies, literature mining |

Experimental Protocols

Protocol 1: High-Throughput Excipient-Enzyme Interaction Screening via Differential Scanning Fluorimetry (DSF)

Objective: Generate empirical stability labels (ΔTm) for excipient-enzyme pairs to train and validate AI models.

Materials: See The Scientist's Toolkit.

Procedure:

- Prepare a master mix of the target enzyme in an appropriate buffer (e.g., 50 mM phosphate, pH 7.0) at a final concentration of 1-5 µM.

- Aliquot the master mix into a 96-well PCR plate. For each excipient, create a dilution series (e.g., 0, 0.1, 0.5, 1, 2% w/v or molar equivalents).

- Include Sypro Orange dye at a recommended 5X final concentration.

- Seal the plate and centrifuge briefly to eliminate bubbles.

- Run the DSF assay using a real-time PCR instrument with a temperature ramp from 25°C to 95°C at a rate of 1°C/min, with fluorescence measurements (ROX or HEX channel) taken at each interval.

- Analyze data by identifying the inflection point of the fluorescence curve (Tm) for each well using instrument software (e.g., Protein Thermal Shift Software). Calculate ΔTm as Tm(excipient) - Tm(control).

- Curate data into a structured table: Enzyme ID, Excipient ID, Concentration, Replicate Tm values, Mean ΔTm.
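
The Tm and ΔTm calculation in the analysis step can be illustrated as follows. The sketch takes Tm as the temperature of the steepest fluorescence increase (maximum first derivative), which is one common simplification; instrument software may instead fit a Boltzmann sigmoid. The melt curves are simulated, and both function names are hypothetical.

```python
import numpy as np


def melting_temperature(temps, fluorescence):
    """Estimate Tm as the temperature where dF/dT is largest."""
    dF_dT = np.gradient(fluorescence, temps)
    return float(temps[np.argmax(dF_dT)])


def simulated_melt_curve(temps, tm, slope=1.5):
    """Idealized sigmoidal unfolding curve (no post-transition signal decay)."""
    return 1.0 / (1.0 + np.exp(-(temps - tm) / slope))


temps = np.arange(25.0, 96.0, 1.0)   # 25-95 °C read at 1 °C intervals

tm_control = melting_temperature(temps, simulated_melt_curve(temps, 60.0))
tm_excipient = melting_temperature(temps, simulated_melt_curve(temps, 63.0))
delta_tm = tm_excipient - tm_control   # ΔTm = Tm(excipient) - Tm(control)
print(tm_control, tm_excipient, delta_tm)
```

With real plate data, the same function would be applied per well and the replicate Tm values averaged before computing the mean ΔTm.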

Protocol 2: Feature Generation from Chemical Structure Using Open-Source Descriptors

Objective: Compute a standardized set of molecular descriptors for excipients from their SMILES strings.

Procedure:

- Data Curation: Compile canonical SMILES for each excipient from reliable sources (e.g., PubChem API). Store in a table with Excipient ID and SMILES.

- Descriptor Calculation: Use the rdkit Python library to compute the descriptor set for each excipient from its SMILES string.

- Data Structuring: Run the descriptor calculation for each SMILES and populate a feature matrix. Normalize all features using StandardScaler or MinMaxScaler.
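
One possible form of the descriptor calculation is sketched below. The original function is not preserved in this text, so the selection of six descriptors, the helper name describe, and the three example excipients are assumptions; the RDKit calls themselves (Chem.MolFromSmiles and the Descriptors functions) are standard.

```python
from rdkit import Chem
from rdkit.Chem import Descriptors

# Illustrative subset of descriptors; a full run could use many more.
DESCRIPTOR_FUNCS = {
    "mol_wt": Descriptors.MolWt,
    "logp": Descriptors.MolLogP,
    "tpsa": Descriptors.TPSA,
    "h_donors": Descriptors.NumHDonors,
    "h_acceptors": Descriptors.NumHAcceptors,
    "rot_bonds": Descriptors.NumRotatableBonds,
}


def describe(smiles):
    """Return a dict of descriptors for one SMILES string."""
    mol = Chem.MolFromSmiles(smiles)
    if mol is None:
        raise ValueError(f"Could not parse SMILES: {smiles!r}")
    return {name: fn(mol) for name, fn in DESCRIPTOR_FUNCS.items()}


# Example excipients (SMILES without stereochemistry, for brevity).
excipients = {
    "glycerol": "OCC(O)CO",
    "sorbitol": "OCC(O)C(O)C(O)C(O)CO",
    "glycine": "NCC(=O)O",
}

feature_table = {name: describe(smi) for name, smi in excipients.items()}
for name, feats in feature_table.items():
    print(name, feats)
```

The resulting table can be converted to a numeric matrix and passed through StandardScaler or MinMaxScaler before model training.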

Visualization

Title: Data Pipeline for AI-Driven Excipient Selection

Title: DSF Protocol for Stability Feature Generation

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Excipient Feature Engineering

| Item | Function in Protocol |

|---|---|

| Recombinant Target Enzyme | The protein of interest for which excipient stabilization is required. Provides the basis for all empirical interaction measurements. |

| Sypro Orange Dye | Fluorescent dye used in DSF (Protocol 1). Binds to hydrophobic patches exposed upon protein unfolding, reporting thermal denaturation. |

| 96-Well PCR Plates (Optical Grade) | Plate format compatible with real-time PCR instruments for high-throughput DSF assays. Must have optical clarity for fluorescence. |

| Real-Time PCR Instrument with Thermal Gradient | Equipment to precisely control temperature ramp and measure fluorescence, enabling automated Tm determination. |

| Chemical Descriptor Software (RDKit) | Open-source cheminformatics library used to calculate molecular features (e.g., logP, TPSA) directly from chemical structures (Protocol 2). |

| Excipient Library (USP/NF Grade) | A curated, chemically diverse set of approved excipients for systematic screening. Provides the base chemical space for model training. |

Application Notes

Within AI-driven excipient selection for enzyme formulation research, predictive models analyze complex datasets linking excipient properties (e.g., hydrophobicity, molecular weight, functional groups) to critical formulation outcomes such as enzyme stabilization, activity retention, and shelf-life. The choice of model architecture profoundly impacts prediction accuracy, interpretability, and computational cost.

Table 1: Comparison of Model Architectures for Excipient Selection

| Feature | Random Forest (RF) | Gradient Boosting (e.g., XGBoost) | Neural Network (NN) |

|---|---|---|---|

| Primary Strength | Robustness, interpretability via feature importance, less prone to overfitting. | High predictive accuracy, efficiency with mixed data types. | Captures complex non-linear and high-order interactions. |

| Key Weakness | Can miss subtle, complex relationships; less accurate than boosting on some tasks. | Requires careful hyperparameter tuning; can overfit if not regularized. | High data requirements; "black-box" nature; extensive computational needs. |

| Interpretability | Moderate (Feature importance scores, partial dependence). | Moderate (Feature importance, SHAP values). | Low (Requires post-hoc explainable AI methods). |

| Typical Performance (R² Range on Formulation Datasets) | 0.70 - 0.85 | 0.75 - 0.90 | 0.80 - 0.95+ (with sufficient data) |

| Best Suited For | Initial screening, identifying key excipient properties, datasets with <10k samples. | High-accuracy prediction for lead excipient identification. | Large-scale, high-dimensional data (e.g., from high-throughput screening). |

Table 2: Example Predictive Performance on Enzyme Stability Dataset

| Model | Mean Absolute Error (Activity Loss %) | R² Score | Key Predictive Features Identified |

|---|---|---|---|

| Random Forest | 8.5% | 0.82 | Excipient glass transition temp, hydrogen bonding capacity. |

| XGBoost | 6.2% | 0.89 | Excipient-enzyme binding free energy (predicted), log P. |

| Neural Network (2 hidden layers) | 5.1% | 0.93 | Non-linear interaction of polarity index & molecular weight. |

Experimental Protocols

Protocol 1: Building a Random Forest Model for Excipient Prescreening

- Objective: To identify the most influential molecular descriptors of excipients for enzyme thermal stability prediction.

- Dataset Preparation: Compile a dataset of 200+ excipients with features (molecular descriptors, physicochemical properties) and target variable (e.g., % enzyme activity after accelerated stability testing).

- Model Training: Using Scikit-learn, train a RandomForestRegressor. Set n_estimators=200, max_features='sqrt', and use random_state for reproducibility.

- Validation: Perform 5-fold cross-validation. Calculate mean R² and MAE across folds.

- Output Analysis: Extract and rank feature_importances_. Plot partial dependence plots for top 3 features to visualize their effect on the predicted stability.
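
The cross-validation and importance-ranking steps can be sketched with the hyperparameters stated in the protocol. The data are synthetic and the feature names are hypothetical placeholders; partial dependence plotting is left out to keep the block free of plotting dependencies.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import cross_validate

rng = np.random.default_rng(1)
feature_names = ["tg_celsius", "h_bond_capacity", "logP", "mol_weight"]

# Synthetic stand-in for a 200-excipient dataset (standardized features).
X = rng.normal(size=(200, 4))
y = 70 + 8 * X[:, 0] + 4 * X[:, 1] + rng.normal(0, 1, 200)   # % activity, illustrative

model = RandomForestRegressor(n_estimators=200, max_features="sqrt", random_state=0)

# 5-fold cross-validation reporting R² and MAE.
cv = cross_validate(model, X, y, cv=5, scoring=("r2", "neg_mean_absolute_error"))
mean_r2 = cv["test_r2"].mean()
mean_mae = -cv["test_neg_mean_absolute_error"].mean()

# Refit on all data to extract impurity-based feature importances.
model.fit(X, y)
ranking = sorted(
    zip(feature_names, model.feature_importances_), key=lambda p: p[1], reverse=True
)
print(f"R2={mean_r2:.2f}, MAE={mean_mae:.2f}")
print(ranking)
```

Impurity-based importances can favor high-cardinality features, so cross-checking the ranking with permutation importance is a reasonable safeguard.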

Protocol 2: Optimizing a Gradient Boosting Model with XGBoost for Formulation Prediction

- Objective: To achieve high-accuracy prediction of optimal excipient concentration ratios.

- Data Splitting: Split data into 70/15/15 for training, validation, and testing.

- Hyperparameter Tuning: Use Bayesian optimization (e.g., hyperopt library) to tune: max_depth (3-10), learning_rate (0.01-0.3), n_estimators (100-500), and subsample (0.7-1.0). Optimize for minimum MAE on the validation set.

- Training & Regularization: Train the tuned model with early stopping (50 rounds) on the validation set to prevent overfitting.

- Interpretation: Calculate SHAP (SHapley Additive exPlanations) values to explain individual predictions and global feature impact.

Protocol 3: Training a Neural Network for High-Throughput Screening Data

- Objective: To model complex, non-linear relationships in large-scale excipient-enzyme compatibility matrices.

- Network Architecture: Design a feedforward network using PyTorch/TensorFlow: Input layer (matches # of features), 2-3 hidden layers (e.g., 128, 64 units) with ReLU activation, Dropout layers (rate=0.3), and a linear output layer.

- Training Procedure: Use Adam optimizer (lr=0.001), MSE loss, and batch sizes of 32. Train for 500 epochs, monitoring loss on a 20% validation split.

- Analysis: Apply sensitivity analysis on the trained model to probe the response of the prediction to variations in input features.

Visualizations

Diagram 1: AI-Driven Excipient Selection Workflow

Diagram 2: Neural Network Architecture for Property Prediction

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Computational Tools for AI-Driven Formulation Research

| Tool/Reagent | Function in Research | Example/Provider |

|---|---|---|

| Molecular Descriptor Software | Generates quantitative features (e.g., logP, polar surface area) for excipients as model input. | RDKit, OpenBabel, MOE |

| Machine Learning Libraries | Provides implementations of RF, GB, and NN algorithms for model development. | Scikit-learn, XGBoost, LightGBM, PyTorch, TensorFlow |

| Hyperparameter Optimization Suites | Automates the search for optimal model settings to maximize performance. | Optuna, Hyperopt, Scikit-optimize |

| Model Interpretation Packages | Enables explanation of model predictions, crucial for scientific validation. | SHAP, LIME, ELI5 |

| High-Performance Computing (HPC) Resources | Accelerates training of complex models (especially NNs) on large datasets. | Local GPU clusters, Cloud services (AWS, GCP) |

The stability and efficacy of enzyme-based therapeutics are critically dependent on their formulation. Excipients—inactive components like stabilizers, buffers, and surfactants—play a vital role in protecting the enzyme from denaturation, aggregation, and degradation. Traditional excipient selection is empirical, time-consuming, and resource-intensive. This Application Note details a step-by-step workflow that integrates Artificial Intelligence (AI) prediction with experimental validation to rationally select excipients for enzyme formulation, accelerating the drug development pipeline.

The Integrated AI-to-Bench Workflow

The following diagram illustrates the core, iterative workflow for AI-driven excipient selection.

Title: AI to Lab Bench Workflow for Excipient Selection

Protocol: AI Model Training for Excipient Prediction

Objective

To train a machine learning (ML) model that predicts the stabilizing efficacy of excipients for a target enzyme under specific stress conditions.

- Data: Curated datasets from public repositories (e.g., USP Enzyme Stabilizer Database, published literature in PubMed).

- Features: Enzyme properties (molecular weight, isoelectric point), excipient properties (chemical class, molecular descriptors), stress conditions (temperature, pH).

- Label: Stabilization metric (e.g., percent activity remaining, aggregation index).

- Software: Python with libraries: scikit-learn, XGBoost, RDKit (for molecular featurization), pandas.

Procedure

- Data Collection & Cleaning: Extract data from sources into a structured table. Handle missing values (imputation or removal) and remove outliers.

- Feature Engineering: Calculate molecular descriptors for excipients using RDKit. Encode categorical variables (e.g., excipient class) using one-hot encoding.

- Model Selection & Training: Split data into training (80%) and test (20%) sets. Train multiple algorithms (e.g., Random Forest, Gradient Boosting, Neural Networks) using 5-fold cross-validation on the training set.

- Model Evaluation: Evaluate models on the held-out test set using metrics: Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and R² score.

- Prediction: Use the best-performing model to predict stabilization scores for a novel list of excipients for your target enzyme. Rank excipients by predicted score.

Example Output Data

Table 1: Performance Metrics of Trained ML Models for Excipient Efficacy Prediction

| Model | RMSE (% Activity) | MAE (% Activity) | R² Score | Training Time (s) |

|---|---|---|---|---|

| Random Forest | 8.7 | 6.2 | 0.89 | 45 |

| XGBoost | 7.9 | 5.8 | 0.92 | 62 |

| Neural Network | 9.1 | 6.9 | 0.86 | 180 |

| Linear Regression | 15.4 | 12.1 | 0.55 | 2 |

Table 2: Top AI-Predicted Excipients for Lysozyme Under Thermal Stress

| Rank | Excipient | Predicted Activity Remaining (%) | Chemical Class | Rationale (AI Feature Importance) |

|---|---|---|---|---|

| 1 | Trehalose | 92 | Sugar | High feature weight for 'hydrophilic interaction' |

| 2 | Sucrose | 89 | Sugar | Similar to trehalose, slightly lower predicted stability |

| 3 | L-Arginine HCl | 85 | Amino Acid | High weight for 'charged side chain' feature |

| 4 | Polysorbate 20 | 78 | Surfactant | High weight for 'surface tension reduction' |

| 5 | Glycerol | 75 | Polyol | Moderate weight for 'preferential exclusion' |

Protocol: Experimental Validation via High-Throughput Screening

Objective

To experimentally validate the top AI-predicted excipients using a Design of Experiments (DOE) approach in a high-throughput microplate format.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for High-Throughput Formulation Screening

| Item | Function | Example Product/Cat. No. |

|---|---|---|

| Target Enzyme | The therapeutic protein of interest. | Lysozyme (e.g., Sigma L6876) |

| AI-Predicted Excipients | Stabilizing agents for testing. | Trehalose, Sucrose, L-Arginine, etc. |

| Microplate (96/384-well) | Platform for high-throughput sample preparation and assay. | Corning 3650 (polypropylene) |

| Liquid Handling Robot | For precise, automated dispensing of buffers, enzymes, and excipients. | Beckman Coulter Biomek i5 |

| Microplate Centrifuge | To mix and degas formulations post-dispensing. | Eppendorf PlateFuge |

| Thermal Cycler with Gradient | To apply controlled thermal stress to multiple formulations simultaneously. | Bio-Rad T100 |

| Microplate Spectrophotometer | To measure enzyme activity (kinetic or endpoint) directly in plates. | Molecular Devices SpectraMax |

| Dynamic Light Scattering (DLS) Plate Reader | To measure particle size and aggregation in situ. | Wyatt Technology DynaPro Plate Reader |

Detailed Experimental Procedure

- DOE Design: Using software (e.g., JMP, Design-Expert), create a screening design (e.g., Fractional Factorial) for the top 5-6 excipients at two concentrations (e.g., low and high). Include control wells (enzyme alone, positive control).

- Formulation Preparation: In a 96-well plate, use a liquid handler to dispense buffer (e.g., 10 mM Histidine, pH 6.0) and stock solutions of excipients according to the DOE layout.

- Enzyme Addition: Add a fixed volume of target enzyme stock solution to each well. Mix thoroughly by pipetting or brief centrifugation.

- Stress Application: Seal the plate and subject it to a defined stress condition (e.g., 40°C for 24 hours) in a thermal cycler. Keep a reference plate at 4°C.

- Activity Assay: Post-stress, immediately assay enzyme activity. For lysozyme: Add Micrococcus lysodeikticus cell suspension to each well and monitor the decrease in absorbance at 450 nm for 5 minutes. Calculate initial reaction velocity.

- Stability Metric: For each formulation, calculate Percent Activity Retained = (Activity_stressed / Activity_unstressed_control) × 100.
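
For the DOE Design step, a two-level fractional factorial layout can also be generated in a few lines of code when dedicated software is not at hand. The sketch below builds a 2^(5-1) half-fraction (16 runs) in which the fifth factor's level is the product of the other four; the choice of generator and the factor names (taken from the top five predicted excipients) are assumptions for illustration.

```python
from itertools import product


def half_fraction_design(factors):
    """Two-level half-fraction: the last factor equals the product of the others."""
    *base, generated = factors
    runs = []
    for levels in product((-1, 1), repeat=len(base)):
        last = 1
        for level in levels:
            last *= level
        run = dict(zip(base, levels))
        run[generated] = last          # coded level: -1 = low, +1 = high
        runs.append(run)
    return runs


factors = ["trehalose", "sucrose", "l_arginine", "polysorbate_20", "glycerol"]
design = half_fraction_design(factors)

for run_id, run in enumerate(design, start=1):
    print(run_id, run)
```

Each coded level would then be mapped to the chosen low and high concentrations, with control wells added separately to the plate layout.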

Data Analysis & Feedback

- Analyze DOE results to identify significant excipient factors and interactions.

- Compare experimental results with AI predictions.

- Feed the new experimental data (excipient, condition, result) back into the training dataset to retrain and refine the AI model for future cycles.

Table 4: Experimental Validation Results for Lysozyme Formulations (40°C, 24h)

| Formulation | Trehalose (mM) | Sucrose (mM) | L-Arg (mM) | PS-20 (% w/v) | Experimental % Activity Retained | AI-Predicted % Activity | Deviation (Exp - Pred) |

|---|---|---|---|---|---|---|---|

| 1 | 100 | 0 | 0 | 0 | 90.2 | 92 | -1.8 |

| 2 | 0 | 100 | 0 | 0 | 86.5 | 89 | -2.5 |

| 3 | 0 | 0 | 50 | 0 | 81.0 | 85 | -4.0 |

| 4 | 50 | 50 | 25 | 0.01 | 94.7 | 88 | +6.7 |

| 5 (Control) | 0 | 0 | 0 | 0 | 65.0 | - | - |

Pathway Diagram: Excipient Stabilization Mechanisms

The following diagram summarizes key stabilization pathways targeted by AI-featurized excipients.

Title: Key Excipient Stabilization Pathways for Enzymes

This guide presents a robust, iterative framework that closes the loop between in silico AI prediction and in vitro experimental validation. By systematically integrating these steps, researchers can transition from a broad list of potential excipients to a verified, optimal formulation with greater speed and rationality than traditional methods, directly supporting the thesis of AI-driven advancement in enzyme formulation research.

This application note details a case study executed within the broader thesis research on AI-driven excipient selection for enzyme formulation. The objective was to develop a stable lyophilized (freeze-dried) formulation for a model enzyme, lactate dehydrogenase (LDH), using a machine learning (ML)-guided approach to identify optimal stabilizers and process conditions, thereby accelerating development timelines and improving success rates over traditional trial-and-error methods.

AI-Driven Excipient Screening & Predictive Modeling

Data Source: A proprietary dataset was constructed from historical formulation studies (80 entries) and augmented with data mined from published literature on protein lyophilization using NLP techniques (40 additional entries). Features included enzyme properties (pI, molecular weight), excipient types and concentrations (sugars, polyols, surfactants, buffers), process parameters (cooling rate, annealing temperature), and critical quality attributes (CQAs) like residual activity (%) and glass transition temperature (Tg').

AI Model & Outcome: A gradient boosting regressor (XGBoost) was trained to predict post-lyophilization activity recovery and long-term stability. The model identified key predictive features for LDH stability.

Table 1: Top Excipient Features Ranked by AI Model Feature Importance

| Excipient Feature | Feature Importance Score | Predicted Primary Function |

|---|---|---|

| Trehalose Concentration | 0.32 | Bulking agent & water substitute |

| Sucrose Concentration | 0.28 | Cryoprotectant & lyoprotectant |

| Presence of Poloxamer 188 | 0.15 | Surfactant (prevents surface adsorption) |

| Histidine Buffer Concentration | 0.12 | Stabilizing pH control |

| Cooling Rate during Freezing | 0.08 | Controls ice crystal size & stress |

| Dextran 40 Presence | 0.05 | Bulking agent & stabilizer |

Based on model predictions, a candidate formulation was proposed for experimental validation.

Table 2: AI-Proposed Candidate Formulation for LDH

| Component | Function | Proposed Concentration |

|---|---|---|

| LDH (model enzyme) | Active Pharmaceutical Ingredient | 1.0 mg/mL |

| Trehalose Dihydrate | Lyoprotectant / Bulking Agent | 50 mM |

| Sucrose | Lyoprotectant | 20 mM |

| Histidine-HCl | Buffer | 10 mM, pH 6.8 |

| Poloxamer 188 | Surfactant | 0.005% w/v |

Experimental Protocols for Validation

Protocol 3.1: Formulation Preparation & Lyophilization

Objective: Prepare the AI-proposed formulation and lyophilize using optimized parameters.

Materials: Lactate Dehydrogenase (from rabbit muscle), trehalose dihydrate, sucrose, L-histidine hydrochloride, Poloxamer 188, ultrapure water.

Procedure:

- Buffer Preparation: Dissolve histidine-HCl in 80% of the final volume of water. Adjust pH to 6.8 using 1M NaOH.

- Excipient Solution: In the histidine buffer, dissolve trehalose and sucrose with gentle stirring. Add Poloxamer 188 and stir until clear.

- Enzyme Addition: Add the required amount of LDH powder to the excipient solution. Gently swirl to dissolve. Avoid vortexing.

- Final Adjustment: Bring to final volume with pH-adjusted histidine buffer. Filter sterilize using a 0.22 µm PES syringe filter.

- Fill: Aseptically aliquot 1.0 mL into sterile 3 mL glass lyophilization vials. Partially stopper with lyo-caps.

- Lyophilization:

- Freezing: Load vials onto pre-cooled shelf (-45°C). Hold for 2 hours.

- Primary Drying: Apply vacuum (100 µbar). Ramp shelf temperature to -25°C over 2 hours and hold for 40 hours.

- Secondary Drying: Ramp shelf temperature to +25°C over 5 hours and hold for 10 hours at 50 µbar.

- Stoppering: Under vacuum, fully stopper vials using the shelf hydraulic system.

- Sealing: Apply aluminum crimp seals.

Protocol 3.2: Post-Lyophilization Activity Assay

Objective: Quantify the recovery of enzymatic activity post-reconstitution.

Materials: Reconstituted LDH formulation, NADH, sodium pyruvate, potassium phosphate buffer (pH 7.5), UV-transparent microplate or cuvette, spectrophotometer.

Procedure:

- Reconstitution: Reconstitute one lyophilized vial with 1.0 mL of sterile water. Invert gently 10 times.

- Assay Mixture: Prepare a master mix containing 50 mM potassium phosphate buffer (pH 7.5) and 0.2 mM NADH.

- Kinetic Measurement: Pipette 980 µL of master mix and 20 µL of reconstituted LDH into a cuvette. Mix by inversion.

- Initiate Reaction: Add 10 µL of 10 mM sodium pyruvate to the cuvette, mix rapidly, and place in spectrophotometer.

- Measurement: Monitor the decrease in absorbance at 340 nm (A340) due to NADH oxidation for 2 minutes at 25°C.

- Calculation: Calculate activity using the slope of the linear decrease (ΔA340/min) and the molar extinction coefficient for NADH (ε = 6220 M⁻¹cm⁻¹). Compare to the activity of an equivalent fresh liquid LDH sample (control). Activity Recovery (%) = (Activity_postlyo / Activity_control) × 100.
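
The calculation step can be written out as a short worked example. It assumes a 1 cm path length and uses the volumes from the procedure (980 µL master mix + 20 µL enzyme + 10 µL pyruvate = 1.010 mL total); the slope values are made up for illustration and are not measured results.

```python
def ldh_activity_u_per_ml(delta_a340_per_min, total_volume_ml=1.010,
                          sample_volume_ml=0.020, path_cm=1.0, epsilon=6220.0):
    """LDH activity in µmol NADH oxidized per minute per mL of enzyme sample."""
    # Beer-Lambert: concentration change (mol/L/min) = ΔA / (ε · l)
    rate_molar_per_min = abs(delta_a340_per_min) / (epsilon * path_cm)
    umol_per_min = rate_molar_per_min * (total_volume_ml / 1000.0) * 1e6
    return umol_per_min / sample_volume_ml


def activity_recovery_percent(activity_postlyo, activity_control):
    """Activity Recovery (%) relative to the fresh liquid control."""
    return activity_postlyo / activity_control * 100.0


# Hypothetical slopes (absorbance units per minute).
activity_control = ldh_activity_u_per_ml(-0.100)
activity_postlyo = ldh_activity_u_per_ml(-0.090)
recovery = activity_recovery_percent(activity_postlyo, activity_control)
print(f"control = {activity_control:.4f} U/mL, recovery = {recovery:.1f}%")
```

Because both samples are assayed with the same volumes and path length, those terms cancel in the recovery ratio; they matter only for the absolute activity values.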

Protocol 3.3: Accelerated Stability Study

Objective: Assess the formulation's stability under stress conditions.

Materials: Sealed lyophilized vials, stability chambers, activity assay reagents.

Procedure:

- Storage: Place sealed lyophilized vials under two conditions:

- Condition A: 5°C ± 3°C (refrigerated control).

- Condition B: 40°C ± 2°C / 75% RH ± 5% RH (accelerated stress).

- Sampling: Remove triplicate vials at time points: 0, 1, 2, 4, and 8 weeks.

- Analysis: Reconstitute each vial and perform the activity assay (Protocol 3.2).

- Data Analysis: Plot residual activity (%) vs. time for each condition. Determine the apparent degradation rate constant.
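
One way to obtain the apparent degradation rate constant in the data analysis step is a log-linear fit, sketched below. It assumes first-order loss of activity, so that ln(A/A0) = -k·t; the protocol itself does not specify the kinetic model. The activity values are generated from a known k to show that the fit recovers it, and are not experimental data.

```python
import numpy as np


def apparent_rate_constant(time_weeks, residual_activity_pct):
    """Fit ln(activity) vs. time; return k (per week), assuming first-order decay."""
    slope, _intercept = np.polyfit(time_weeks, np.log(residual_activity_pct), 1)
    return -slope


time_weeks = np.array([0.0, 1.0, 2.0, 4.0, 8.0])     # sampling time points
true_k = 0.01                                         # illustrative value, per week
activity_pct = 100.0 * np.exp(-true_k * time_weeks)  # noise-free synthetic curve

k_fit = apparent_rate_constant(time_weeks, activity_pct)
print(f"apparent k = {k_fit:.4f} per week")
```

With triplicate vials per time point, the replicate values can either be averaged first or all passed to the fit, which weights each vial equally.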

The AI-proposed formulation was experimentally prepared and lyophilized. Its performance was compared to a standard sucrose-only formulation and a fresh liquid control.

Table 3: Experimental Results of AI-Proposed Formulation vs. Control

| Quality Attribute | AI Formulation (Proposed) | Standard Control (Sucrose Only) | Acceptance Target |

|---|---|---|---|

| Post-Lyophilization Activity Recovery (%) | 98.2 ± 1.5 | 85.4 ± 3.2 | >90% |

| Reconstitution Time (seconds) | < 30 | < 30 | < 60 |

| Cake Appearance | Elegant, intact cake | Minor shrinkage | Intact, pharmaceutically elegant |

| Residual Moisture Content (% by KF) | 0.8 ± 0.2 | 1.5 ± 0.3 | < 2.0% |

| 8-Week Activity @ 5°C (%) | 97.5 ± 1.0 | 88.1 ± 2.5 | >95% |

| 8-Week Activity @ 40°C/75% RH (%) | 92.3 ± 1.8 | 70.5 ± 4.1 | >85% |

Visualizations

Diagram 1: AI-Driven Formulation Development Workflow

Diagram 2: Enzyme Stabilization & Degradation Pathways

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for AI-Driven Lyophilization Studies

| Item / Reagent | Function / Role in Research | Example Supplier/Catalog |

|---|---|---|

| Lactate Dehydrogenase (LDH) | Model thermolabile enzyme for stability studies. | Sigma-Aldrich, L2500 |

| Trehalose Dihydrate | Non-reducing disaccharide; primary lyoprotectant that vitrifies, replacing water hydrogen bonds. | MilliporeSigma, 90210 |

| Sucrose | Lyoprotectant and cryoprotectant; stabilizes protein native state during drying. | Avantor, 4108-01 |

| Histidine-HCl Buffer | Provides stable pH environment near enzyme's optimal pH, minimizing deamidation. | Thermo Fisher, AAJ61830AK |

| Poloxamer 188 (Pluronic F-68) | Non-ionic surfactant; minimizes air-water interface-induced denaturation during processing. | BASF, 62000801 |

| DSC Instrument | Measures critical temperatures (Tg', Tc) of formulation during freezing for process optimization. | TA Instruments, Q2000 |

| Lyophilizer (Bench-top) | Provides controlled freezing, primary & secondary drying for sample preparation. | Labconco, FreeZone 4.5L |

| Microplate Spectrophotometer | Enables high-throughput kinetic activity assays for rapid data generation. | BioTek, Synergy H1 |

| Python ML Libraries (scikit-learn, XGBoost) | Core tools for building predictive models for excipient performance. | Open Source |

| Electronic Lab Notebook (ELN) | Centralized, structured data capture essential for training AI models. | Benchling, IDBS ELN |

Beyond Prediction: Using AI to Diagnose Failures and Optimize Formulation Design

1. Introduction

Within AI-driven excipient selection for enzyme formulation research, predictive models can identify stabilizing excipients but often act as "black boxes." Interpreting these models via feature importance is critical for root-cause analysis of predicted instability, transforming predictions into mechanistic, actionable insights for formulation scientists.

2. Core Concepts: Feature Importance Methods

Table 1: Common Feature Importance Interpretation Methods

| Method | Description | Use Case in Formulation | Key Output |

|---|---|---|---|

| SHAP (SHapley Additive exPlanations) | Game theory-based; assigns each feature an importance value for a specific prediction. | Explaining individual prediction of poor stability for a specific enzyme-excipient combination. | SHAP values (positive/negative contribution per feature). |

| Permutation Importance | Measures score decrease when a single feature is randomly shuffled. | Identifying which formulation features globally most impact model stability predictions. | Importance score (drop in model performance). |

| Partial Dependence Plots (PDP) | Shows marginal effect of a feature on the predicted outcome. | Understanding the non-linear relationship between pH or ionic strength and predicted stability score. | 2D plot of feature value vs. predicted outcome. |

| Local Interpretable Model-agnostic Explanations (LIME) | Approximates complex model locally with an interpretable model (e.g., linear). | Providing a post-hoc, intuitive explanation for a single complex prediction. | Simplified local model with coefficients. |
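
To make the SHAP row concrete, the sketch below computes exact Shapley values for a three-feature toy predictor by averaging each feature's marginal contribution over all feature orderings, relative to a single reference formulation. The model, feature names, and values are hypothetical. This brute-force calculation is not how the `shap` library works internally; it only illustrates the quantity that SHAP approximates.

```python
from itertools import permutations


def toy_stability_model(x):
    """Hypothetical predictor of residual activity (%); not a fitted model."""
    score = 60.0 + 0.05 * min(x["trehalose_ratio"], 600.0)
    score -= 300.0 * x["surfactant_pct"]
    # Interaction: pH above 6.5 hurts more when surfactant is present.
    score -= 100.0 * max(0.0, x["ph"] - 6.5) * x["surfactant_pct"]
    return score


def exact_shapley(predict, instance, baseline):
    """Average marginal contribution of each feature over all orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)      # start from the reference formulation
        previous = predict(current)
        for name in order:            # switch features to the instance one by one
            current[name] = instance[name]
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


baseline = {"trehalose_ratio": 600.0, "surfactant_pct": 0.0, "ph": 6.0}
instance = {"trehalose_ratio": 300.0, "surfactant_pct": 0.02, "ph": 7.0}

shapley_values = exact_shapley(toy_stability_model, instance, baseline)
for name, value in shapley_values.items():
    print(f"{name}: {value:+.2f}")
```

The contributions sum to the difference between the prediction for the flagged formulation and the prediction for the reference, which is the additivity property that makes per-feature attributions interpretable. The cost grows factorially with the number of features, so real workflows rely on approximations.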

3. Application Protocol: Root-Cause Analysis Workflow

Protocol 3.1: Integrated AI Interpretation for Excipient Selection Failure Analysis

Objective: Diagnose the root cause(s) when an AI model predicts poor long-term stability for a novel enzyme formulation with a candidate excipient library.

Materials: Trained regression/classification model (e.g., gradient boosting, random forest), formulation dataset (features: enzyme properties, excipient types/concentrations, process conditions, stability metrics), SHAP/LIME libraries.

Procedure:

- Prediction & Flagging: Input candidate formulation parameters into the AI model. Flag all formulations predicted to fall below stability thresholds (e.g., <90% activity after 6 months).

- Global Analysis: Compute permutation importance across the entire dataset. Rank features (e.g., "excipient A concentration," "lyophilization cycle ramp rate") by their overall impact on stability prediction.

- Local Interpretation: For each flagged unstable formulation, calculate SHAP values.

- Identify top 3-5 features with the largest negative SHAP values (primary instability drivers).

- Examine interaction effects using SHAP interaction values (e.g., between "buffer species" and "storage temperature").

- Mechanistic Hypothesis Generation: Map high-importance features to known biochemical/physicochemical principles (e.g., high negative SHAP for "surfactant concentration" may indicate interfacial denaturation risk).

- Validation Loop: Design a minimal experimental set (3-5 formulations) to perturb the identified root-cause features. Feed results back to retrain and refine the AI model.
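
The global analysis step above can be illustrated with a model-agnostic permutation importance routine written against the standard library only. The toy model, feature names, and data are hypothetical; in practice a library routine such as scikit-learn's `sklearn.inspection.permutation_importance` would normally be applied to the trained model instead.

```python
import math
import random


def rmse(y_true, y_pred):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def permutation_importance(predict, rows, targets, n_repeats=20, seed=0):
    """Mean increase in RMSE when one feature column is shuffled."""
    rng = random.Random(seed)
    base_error = rmse(targets, [predict(r) for r in rows])
    importances = {}
    for feature in rows[0]:
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[feature] for r in rows]
            rng.shuffle(shuffled)  # break the link between feature and target
            permuted = [dict(r, **{feature: v}) for r, v in zip(rows, shuffled)]
            error = rmse(targets, [predict(r) for r in permuted])
            increases.append(error - base_error)
        importances[feature] = sum(increases) / n_repeats
    return importances


def toy_model(row):
    """Hypothetical stability predictor; 'vial_lot' is deliberately ignored."""
    return 50.0 + 0.04 * row["trehalose_ratio"] - 2.0 * row["ramp_rate"]


data_rng = random.Random(42)
rows = [
    {
        "trehalose_ratio": data_rng.uniform(100, 1000),
        "ramp_rate": data_rng.uniform(0.1, 2.0),
        "vial_lot": data_rng.randint(1, 5),
    }
    for _ in range(60)
]
targets = [toy_model(r) for r in rows]  # synthetic labels, for illustration only

importances = permutation_importance(toy_model, rows, targets)
for name, score in sorted(importances.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.3f}")
```

A feature the model never uses receives an importance of zero, which is a quick sanity check before the ranked features are mapped to mechanistic hypotheses.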

4. Case Study: Interpreting a Lysozyme Excipient Model

A gradient boosting model was trained to predict the residual activity of lysozyme after accelerated stability testing based on 15 formulation features.

Table 2: Top Feature Importances from Model Interpretation

| Feature | Permutation Importance (Score Drop) | Typical Negative SHAP Value Context (for Unstable Prediction) | Proposed Root-Cause Mechanism |

|---|---|---|---|

| Trehalose:Protein Molar Ratio | 0.42 | Ratio < 500:1 | Insufficient vitrification & water replacement. |

| Primary Drying Temperature | 0.31 | Temperature > -15°C | Cake collapse during lyophilization, impairing reconstitution. |

| Presence of Surfactant (Polysorbate 80) | 0.28 | Concentration > 0.01% w/v | Introduction of hydrophobic interfaces, promoting aggregation. |

| pH of Bulking Solution | 0.19 | pH > 6.5 | Deviation from protein pI, increasing conformational flexibility. |

| Lyophilization Cycle Ramp Rate | 0.15 | Ramp Rate > 1°C/min | Inhomogeneous drying, inducing mechanical stress. |

5. The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for AI-Driven Formulation Research

| Item | Function in AI Interpretation Workflow |

|---|---|

| High-Throughput Stability Assay Kits (e.g., fluorescence-based aggregation probes, activity assays) | Generate rapid, quantitative stability labels for training and validating AI models. |

| Design of Experiment (DoE) Software | Creates optimal formulation matrices for generating balanced, information-rich training data. |

| SHAP/LIME Python Libraries (shap, lime) | Core computational tools for calculating and visualizing feature contributions. |

| Forced Degradation Study Materials (e.g., temperature/humidity chambers, light sources) | Induce controlled instability to populate AI training data with failure modes. |

| Protein Characterization Suite (DSC, DLS, FTIR) | Provides ground-truth biophysical data to corroborate AI-identified instability mechanisms. |

6. Visualizing the Interpretation Workflow

(Title: AI Interpretation to Experiment Workflow)

(Title: From AI Feature to Corrective Action)

Within AI-driven excipient selection for enzyme formulation research, predictive models must identify stabilizers that maintain enzymatic activity under stress. This application is highly sensitive to model reliability. Overfitting leads to non-generalizable excipient recommendations, data bias skews selection towards historically used but suboptimal compounds, and poor interpretability hinders scientific validation and adoption. These pitfalls directly compromise formulation efficiency and success rates in drug development.

Application Notes & Protocols

Pitfall: Overfitting

Description: The model learns noise and spurious correlations from the limited, high-dimensional datasets typical in formulation science (e.g., spectral data of excipient-enzyme mixtures) and therefore fails on new chemical scaffolds.

Diagnosis Protocol:

- Data Partitioning: Split dataset (e.g., excipient property library + enzyme stability outcomes) into: Training (70%), Validation (15%), Hold-out Test (15%). Ensure splits are stratified by enzyme class.

- Learning Curve Analysis: Train the model on incrementally larger training subsets. Plot training and validation performance (e.g., RMSE on the predicted % activity remaining) against training set size.

- Key Metrics: A diverging gap between training and validation performance indicates overfitting. Monitor metrics in Table 1.
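
The key metrics can be computed with a few helper functions. The sketch below assumes the train/validation gap is expressed relative to the training RMSE and that the cross-validation spread uses the sample standard deviation; the text does not define either, so both are assumptions. The thresholds come from Table 1 (Quantitative Indicators of Overfitting). The "borderline" label for values between the two thresholds and the example numbers are hypothetical.

```python
import statistics


def relative_rmse_gap_pct(train_rmse, val_rmse):
    """Validation-minus-training RMSE as a percentage of training RMSE."""
    return (val_rmse - train_rmse) / train_rmse * 100.0


def cv_spread_pct(cv_scores):
    """Sample standard deviation of fold scores as a percentage of their mean."""
    return statistics.stdev(cv_scores) / statistics.mean(cv_scores) * 100.0


def classify(value, acceptable_below, overfit_above):
    if value < acceptable_below:
        return "acceptable"
    if value > overfit_above:
        return "overfitting"
    return "borderline"  # band between the two thresholds, not named in the table


# Hypothetical numbers, for illustration only.
gap = relative_rmse_gap_pct(train_rmse=5.0, val_rmse=5.4)
spread = cv_spread_pct([0.80, 0.84, 0.78, 0.82, 0.81])

print(f"RMSE gap: {gap:.1f}% -> {classify(gap, 15.0, 25.0)}")
print(f"CV spread: {spread:.1f}% -> {classify(spread, 10.0, 15.0)}")
```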

Mitigation Protocol:

- Method: Implement k-fold Cross-Validation (CV) with Early Stopping for Neural Networks.

- Procedure:

- Divide the training dataset into k=5 or k=10 folds.

- For each fold iteration, train on k-1 folds, validate on the remaining fold.

- For neural networks, monitor validation loss each epoch. Halt training when validation loss fails to improve for 10 consecutive epochs (patience=10).

- Regularize via L2 regularization (weight decay=1e-4) and Dropout (rate=0.5 for dense layers).

- Final model is retrained on the entire training set for a number of epochs equal to the median optimal epoch from CV.
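
The procedure above can be sketched without committing to a specific deep learning framework. The helpers below generate k-fold index splits, apply the patience rule to a sequence of per-epoch validation losses, and take the median best epoch across folds. The function names and the simulated U-shaped loss curves are hypothetical stand-ins for real training runs. L2 regularization and dropout are configured in the network definition itself and are not shown.

```python
import random
import statistics


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def best_epoch_with_early_stopping(val_losses, patience=10):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training halts once validation loss has not improved for `patience`
    consecutive epochs, or when the list of losses is exhausted.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses)


def simulated_val_losses(minimum_at, n_epochs=100):
    """Synthetic U-shaped validation curve standing in for a real training run."""
    return [0.5 + (e - minimum_at) ** 2 / 400 for e in range(1, n_epochs + 1)]


# One simulated curve per fold (k = 5); the minima are arbitrary illustration values.
best_epochs = []
for fold_minimum in [18, 20, 25, 22, 19]:
    best, stopped = best_epoch_with_early_stopping(simulated_val_losses(fold_minimum))
    best_epochs.append(best)

final_epochs = statistics.median(best_epochs)
print(f"Best epochs per fold: {best_epochs}; retrain for {final_epochs} epochs")
```

In a real run, each fold's loss list would be produced by training on the indices from `k_fold_indices` and evaluating on the held-out fold after every epoch.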

Table 1: Quantitative Indicators of Overfitting

| Metric | Acceptable Range | Overfitting Indicator | Typical Value in Stable Excipient Model |

|---|---|---|---|

| Train vs. Val RMSE Gap | < 15% | > 25% | 8% |

| Cross-Validation Std Dev | < 10% of mean CV score | > 15% of mean CV score | 5.2% |

| Model Complexity (# params) | Appropriate for dataset size (n/10 rule) | Params >> number of samples | 50k params for 5k samples |

Visualization: Overfitting Diagnosis Workflow

Title: Overfitting Diagnosis and Mitigation Workflow

Pitfall: Data Bias

Description: Historical formulation datasets are biased towards common excipients (e.g., sucrose, trehalose, polysorbates), underrepresenting novel polymers or natural extracts, leading to models that reinforce the status quo.

Diagnosis Protocol:

- Data Audit: For each feature (e.g., excipient molecular weight, logP, functional groups), calculate statistical disparity (mean difference, KL divergence) between the distribution in the full dataset versus the subset associated with successful formulations.

- Outcome Analysis: Calculate prevalence of successful outcomes per excipient class. Flag classes with very high/low success rates disproportionate to their chemical diversity.

- Synthetic Minority Evaluation: Train model, then evaluate performance on a held-out set containing artificially upsampled rare excipient classes.
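
For the data audit step, the KL divergence between the successful subset and the full dataset can be computed from binned counts of a feature. The sketch below is a minimal illustration: the bin counts are hypothetical, and the direction D_KL(successful || full) is an assumption, since the text does not state which direction is intended.

```python
import math


def kl_divergence(p_counts, q_counts):
    """D_KL(P || Q) in nats for two histograms defined over the same bins."""
    p_total, q_total = sum(p_counts), sum(q_counts)
    divergence = 0.0
    for p_count, q_count in zip(p_counts, q_counts):
        if p_count == 0:
            continue  # a bin with zero probability under P contributes nothing
        # q_count is never zero here when P is a subset of Q, as in this audit.
        p, q = p_count / p_total, q_count / q_total
        divergence += p * math.log(p / q)
    return divergence


# Hypothetical counts per molecular-weight bin, for illustration only.
full_dataset = [120, 300, 260, 90, 30]
successful_subset = [20, 150, 110, 15, 5]

audit_kl = kl_divergence(successful_subset, full_dataset)
print(f"D_KL(successful || full) = {audit_kl:.4f} nats")
```

A value near zero means successful formulations sample the feature in the same way as the dataset as a whole. Larger values flag features where success is concentrated in a narrow part of the range.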

Mitigation Protocol:

- Method: Bias-Aware Sampling & Adversarial Debiasing.

- Procedure:

- Stratified Sampling: Ensure mini-batches during training contain proportional representation from all excipient chemical classes (e.g., sugars, polyols, surfactants, amino acids).

- Adversarial Debiasing (Algorithmic): Implement a dual-network architecture.

- Primary Network: Predicts formulation success.

- Adversary Network: Attempts to predict the excipient class from the primary network's learned embeddings.

- Train the primary network to maximize prediction accuracy while minimizing the adversary's accuracy (gradient reversal layer), forcing it to learn features invariant to excipient class bias.
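
The stratified sampling step of the procedure above can be sketched as follows; the adversarial network itself depends on the deep learning framework in use and is not shown. The function name and class labels are hypothetical. Each sample is placed at an evenly spaced position within its class, and the merged ordering is then cut into batches, so each batch approximates the dataset's class proportions.

```python
import random
from collections import Counter, defaultdict


def stratified_batches(class_labels, batch_size, seed=0):
    """Return index batches whose class mix approximates the overall proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(class_labels):
        by_class[label].append(index)

    keyed = []
    for label, indices in by_class.items():
        rng.shuffle(indices)  # randomize which samples of a class land in which batch
        for rank, index in enumerate(indices):
            # Spread each class evenly over the interval (0, 1).
            keyed.append(((rank + 0.5) / len(indices), label, index))

    keyed.sort()
    ordered = [index for _, _, index in keyed]
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


# Hypothetical dataset: 100 formulations across four excipient classes.
labels = ["sugar"] * 40 + ["polyol"] * 20 + ["surfactant"] * 20 + ["amino_acid"] * 20
batches = stratified_batches(labels, batch_size=10)

for batch in batches[:2]:
    print(Counter(labels[i] for i in batch))
```

Proportional batches keep rare excipient classes present in every gradient step, but they do not change the overall class balance. Oversampling rare classes is a separate design choice.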

Table 2: Audit of Potential Data Bias in an Excipient Library

| Excipient Class | % in Total Dataset | % in Successful Formulations | Disparity Ratio | Risk Level |

|---|---|---|---|---|

| Sugars (Disaccharides) | 42% | 68% | 1.62 | High |

| Amino Acids | 18% | 15% | 0.83 | Low |

| Novel Synthetic Polymers | 8% | 2% | 0.25 | Critical |

| Natural Surfactants | 12% | 9% | 0.75 | Medium |
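
The Disparity Ratio column is consistent with dividing each class's share of successful formulations by its share of the total dataset. The short check below reproduces the tabulated values under that assumed definition. It does not assign risk levels, because the table does not state the thresholds used.

```python
# (% in total dataset, % in successful formulations), taken from Table 2.
table_2 = {
    "Sugars (Disaccharides)": (42, 68),
    "Amino Acids": (18, 15),
    "Novel Synthetic Polymers": (8, 2),
    "Natural Surfactants": (12, 9),
}


def disparity_ratio(pct_total, pct_successful):
    """Share among successes divided by share in the dataset (assumed definition)."""
    return pct_successful / pct_total


ratios = {name: round(disparity_ratio(*pcts), 2) for name, pcts in table_2.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio}")
```

A ratio above 1 means the class is over-represented among successful formulations relative to how often it was tested, and a ratio below 1 means it is under-represented.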

Visualization: Adversarial Debiasing Architecture

Title: Adversarial Debiasing Network Architecture

Pitfall: Model Interpretability

Description: "Black-box" models (e.g., deep neural networks) provide no insight into why an excipient is predicted to be stabilizing, hindering scientific trust and hypothesis generation.

Interpretation Protocol:

- Method: SHAP (SHapley Additive exPlanations) Analysis for Feature Importance.

- Procedure:

- Train your best-performing model (e.g., gradient boosting machine or neural network).

- Using the SHAP library (KernelExplainer or TreeExplainer), compute Shapley values for each prediction on the test set.

- Global Interpretability: Plot a summary bar chart of mean absolute SHAP values across the dataset to see which excipient features drive overall model predictions (e.g.,